At OVHcloud, we recently made a change to our internal Observability stack. After testing and comparing the different solutions on the market, we opted for an open source solution. With this blog post, we’re starting a series of articles to provide feedback on our selection process and what we’ve learned along the way. Our mission was to find a horizontally scalable, highly available, multi-tenant, long-term storage for Prometheus. We begin this series with an introduction to Prometheus remote storage…

Over the last decade, Prometheus has become one of the standards for Observability. Its core concept is well suited to today’s technological use cases, and it makes sense that the open source community loves it. While Prometheus does a lot of things really well, when it comes to long-term storage users must find their own solution. This blog post series discusses Prometheus’s remote storages, the technical challenges they aim to solve and, more importantly, how to pick the right one for you.

What is a remote storage?

Prometheus can be configured to read from or write to a remote storage on top of its local storage. This allows it to support long-term storage of users’ data. The two features are called remote_read and remote_write.

With remote_read configured, Prometheus will answer read queries with data from the remote storage. remote_write is responsible for shipping samples to the remote storage. Both of them are extremely useful and highly configurable. For the rest of this blog post, let’s focus on remote write.
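To make the two features more concrete, here is a minimal sketch that renders the corresponding part of a prometheus.yml file. The `remote_write`, `remote_read` and `url` keys are Prometheus configuration fields; the function name and the endpoint URLs are hypothetical placeholders, and the actual paths depend on the remote storage you pick.

```python
def render_remote_storage_config(write_url: str, read_url: str) -> str:
    """Render the remote_write / remote_read part of a prometheus.yml file."""
    return (
        "remote_write:\n"
        f"  - url: '{write_url}'\n"
        "remote_read:\n"
        f"  - url: '{read_url}'\n"
    )


# Hypothetical endpoints, used only for illustration.
config = render_remote_storage_config(
    "https://metrics.example.com/api/v1/write",
    "https://metrics.example.com/api/v1/read",
)
print(config)
```

Real deployments usually add authentication, relabeling and queue tuning on top of this; the sketch only shows the minimum needed to point Prometheus at a remote endpoint.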

Whether you are a cloud provider or building an in-house Observability stack, it is not always appropriate or possible to connect to your customers’ infrastructure to extract data.

With a remote write approach, customers keep strict control over what comes in and out of their infrastructure. We could argue that iptables coupled with authentication is secure enough, but this is still one more door to keep an eye on. With tight security taken into account, remote write makes a lot of sense from a service provider’s point of view.

Now that we know we want a remote write compatible storage, we must take into account that not all remote storages are equal. The list of solutions keeps growing every day; let’s see if we can differentiate them.

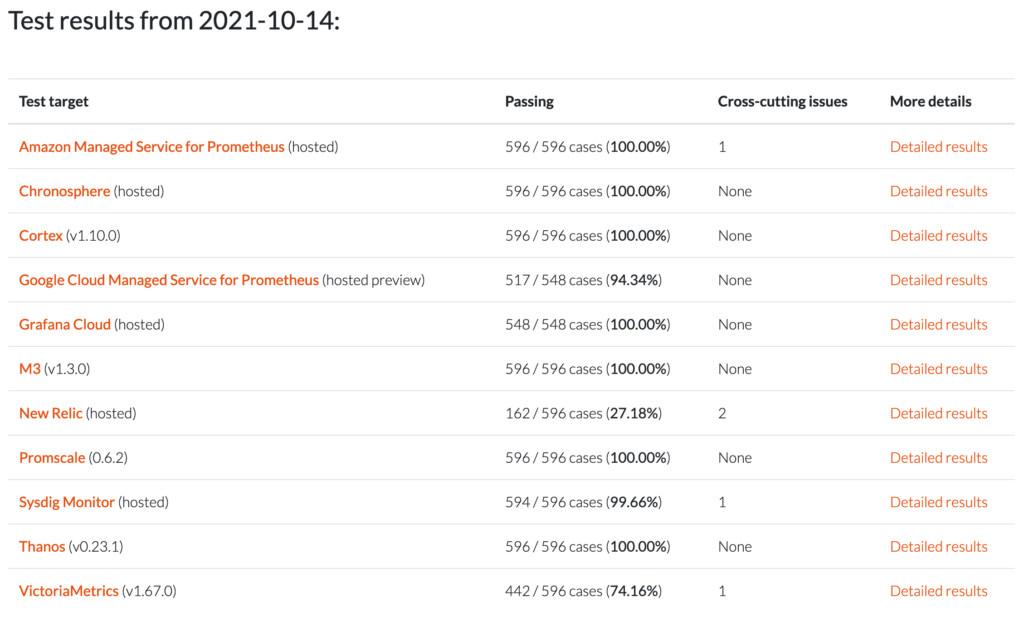

When we write metrics to a remote storage, it is because we want to read them back later. Most Observability use cases imply writing down tons of data that will be queried afterwards. PromQL is the query language used to query Prometheus and therefore any associated remote storage. It makes sense to check how PromQL compliant the solutions are. Fear not, the Prometheus community is already tackling this question for us with PromQL Compliance.

As you can see, most remote storages are 100% compliant with Prometheus results. Good news. This means users have a plethora of options.

However, readers must not underestimate this point. Indeed, compliance impacts what you can query from the backend, how you can query it, and the accuracy of the result. It might not be trivial to reach full compliance and to stay compliant. Maintainers might also choose not to be compliant and explain why.
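To illustrate what "accuracy of the result" means in practice, here is a simplified sketch that compares the answer of a reference Prometheus with the answer of a remote storage for the same query. It is not how the PromQL Compliance tooling is implemented; the function name, the data layout (series name mapped to value) and the sample values are assumptions made for the example.

```python
import math


def compare_results(reference: dict, candidate: dict, rel_tol: float = 1e-9) -> list:
    """Return the series that are missing or whose value differs between two results."""
    mismatches = []
    for series in sorted(set(reference) | set(candidate)):
        ref_value = reference.get(series)
        cand_value = candidate.get(series)
        if (
            ref_value is None
            or cand_value is None
            or not math.isclose(ref_value, cand_value, rel_tol=rel_tol)
        ):
            mismatches.append(series)
    return mismatches


# Hypothetical results for the same query on two backends.
reference = {'up{job="api"}': 1.0, 'up{job="db"}': 1.0}
candidate = {'up{job="api"}': 1.0, 'up{job="db"}': 0.0}

mismatches = compare_results(reference, candidate)
print(mismatches)
```

Running such a comparison over a set of queries that matter to you is a cheap way to notice when a backend drifts away from Prometheus behaviour.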

The Prometheus world is growing in adoption and is under active development. If a solution is compatible today, there is no guarantee it’ll stay compatible tomorrow.

Which brings us to the second point, the community. How healthy, large and active is the community behind each piece of software?

Is it easy to contact them? Discuss issues? Propose features and PRs? We tend to take for granted that PRs will be reviewed and that we’ll find someone to help us troubleshoot a bug, but this is not necessarily the case.

Feature set

To address your own technical challenges, you must pick the solution that has the features you need. If you need multi-tenancy, check that point. If you need to downsample your data, add this to your checklist. Don’t be shy, dig a little deeper. Test the feature and look for its limitations. Testing is the only way to make an informed decision.

To give you an idea, you might want to have a look at the following features:

- multi-tenancy

- rate limiting

- deduplication

- deletion

- downsampling

Scalability

Nowadays the word scalability is present almost everywhere. How well does each remote storage scale? Can you write 2M samples/sec? Can you answer 1M queries/sec? Can you have 200M active series in total? 1B active series? Per tenant?
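These numbers are linked together. As a back-of-the-envelope sketch, assuming every active series receives one sample per scrape and a hypothetical scrape interval of 15 seconds, the write throughput follows directly from the number of active series. The function names are illustrative.

```python
def samples_per_second(active_series: int, scrape_interval_s: float) -> float:
    """Ingestion rate if every active series gets one sample per scrape."""
    return active_series / scrape_interval_s


def active_series_for_rate(samples_per_s: float, scrape_interval_s: float) -> float:
    """Number of active series that a given ingestion rate can sustain."""
    return samples_per_s * scrape_interval_s


# Assumption: 15 s scrape interval, chosen only for illustration.
rate = samples_per_second(200_000_000, 15)
series = active_series_for_rate(2_000_000, 15)

print(f"200M active series -> {rate:,.0f} samples/sec")
print(f"2M samples/sec     -> {series:,.0f} active series")
```

Under this assumption, 200M active series means more than 13M samples/sec to ingest, while 2M samples/sec only covers 30M active series. A different scrape interval shifts the figures proportionally.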

You can get a rough understanding of the bottlenecks by looking at the architecture diagram. But to get a crystal clear answer there is only one way: you need to build a proof of concept.

By the way, you can even try a remote storage right now on our managed k8s. Most open source remote storages offer Helm charts or operators to do so: VictoriaMetrics, Timescale, Mimir.

Cost

Along with scalability comes TCO, which stands for Total Cost of Ownership. This boils down to how expensive a solution and its infrastructure can be when you take all costs into account. For remote storage, on top of the team operating the infrastructure, we must take into account the infrastructure itself. All technical solutions rely on 4 categories: trained engineers, compute resources, network and… storage. It is critical to take into account all aspects of the target solution. Otherwise, be ready for a surprise at the end of the month.
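For the storage part, a first estimate can be sketched with the capacity planning formula given in the Prometheus storage documentation: retention time multiplied by ingested samples per second multiplied by bytes per sample. The retention and the 2 bytes per sample used below are assumptions for the example; the real figure depends on the remote storage, its compression, its indexes and its replication factor.

```python
SECONDS_PER_DAY = 86_400


def needed_disk_bytes(samples_per_s: float, retention_days: int, bytes_per_sample: float) -> float:
    """Rough disk usage: retention (s) * ingestion rate * bytes per sample."""
    return samples_per_s * retention_days * SECONDS_PER_DAY * bytes_per_sample


# Assumptions for illustration: 2M samples/sec, one year of retention,
# 2 bytes per sample, no replication.
size_bytes = needed_disk_bytes(2_000_000, 365, 2.0)
size_tb = size_bytes / 1e12

print(f"{size_tb:.1f} TB before replication and indexes")
```

With these inputs the estimate lands around 126 TB, which is only the storage line of the bill. Engineers, compute and network still have to be added.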

Conclusion

As we have demonstrated, there are a lot of technical solutions to address long-term storage. However, before putting one solution in production, we need to thoroughly identify and assess all trade-offs. In the next posts we will have a look at how to get to know your remote storage, bench it, break it.

After 10 years as a Sysadmin in High Performance Computing, Wilfried Roset is now part of OVHcloud as Engineering Manager for their Databases Product Unit. He focuses on industrialization, reliability and performance for both internal and public cluster offers.