In our previous posts about choosing a Prometheus remote storage, we saw how to set up a benchmarking infrastructure and how to benchmark PromQL performance. We were able to obtain results, but the benchmark as a whole was flawed in several ways:

- it’s expensive, as you need to spawn more infrastructure than necessary to assess one particular aspect of your remote storage.

- it’s hard to reproduce exactly the same setup: even with the same configuration and software versions, you will get similar results, but not exactly the same ones.

- you’re not always benchmarking what you think you are. We spent quite some time troubleshooting performance issues that turned out to be in our Prometheus or HAProxy configuration.

- it focuses mainly on the write path, with no concurrent stress on the read path, which is not realistic.

This blog post discusses how we should have benchmarked our remote storage.

How to run a good benchmark? k6 to the rescue

A good benchmark needs to be accurate and reproducible. Moreover, for our use case we want a tool that exercises both Prometheus’s read and write paths. Finally, we need to be able to remove all unnecessary pieces, so that we can focus on the remote storage only.

Such software could be a project of its own, but fortunately for us there is an open-source solution for that: k6.

k6 is a modern, general-purpose load testing tool that can be extended with modules to support Prometheus remote storage. Sounds interesting, doesn’t it?

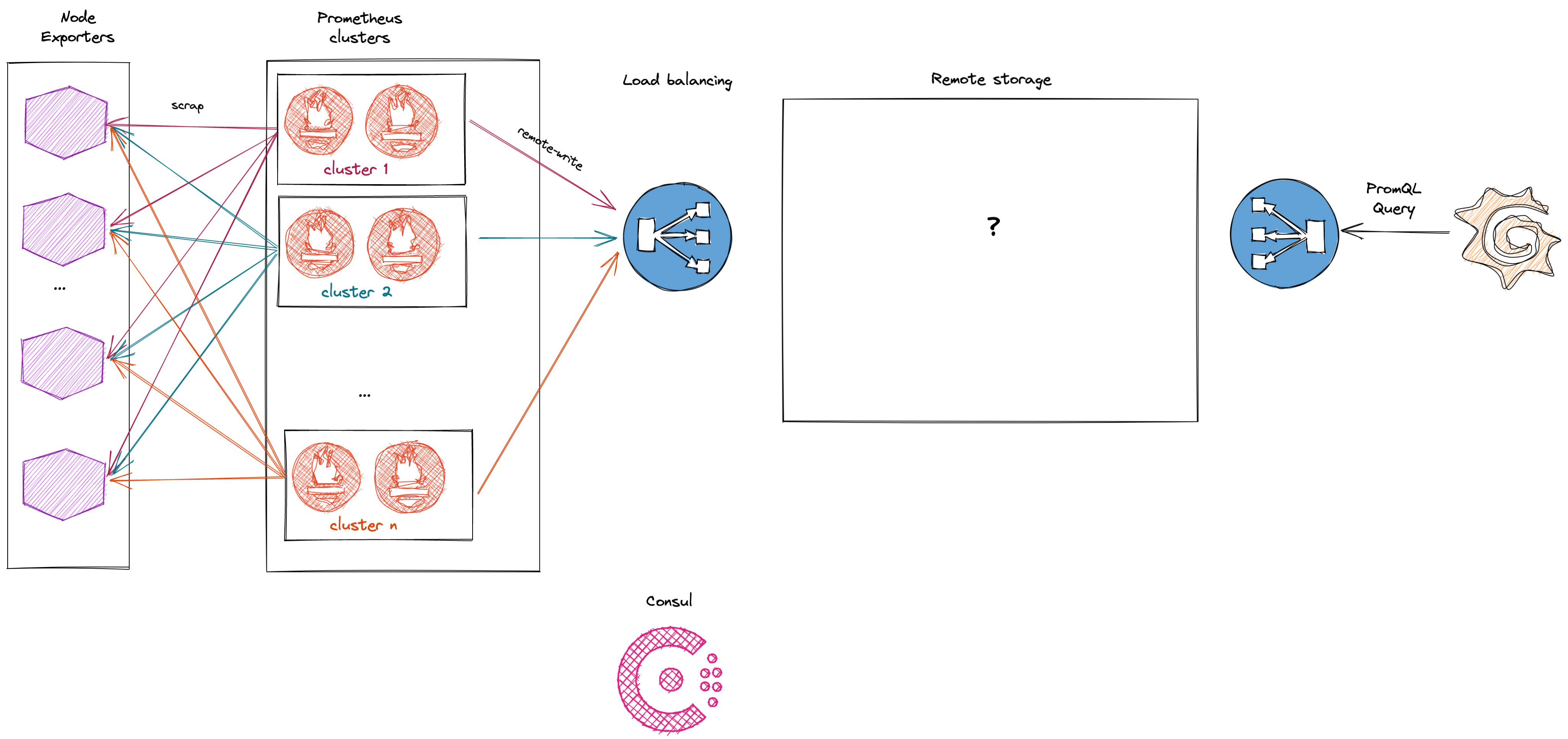

In our previous blog post, we explained how we built our benchmarking infrastructure, which had to be rather complex in order to be accurate.

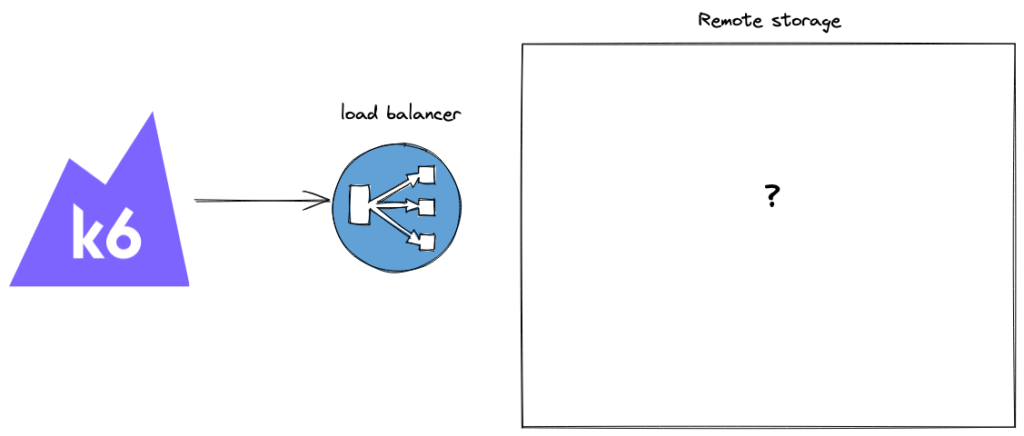

With k6 as the benchmarking tool, the infrastructure can be greatly simplified.

k6 is quite flexible and configurable. Its input is a load testing script: you can either write your own or reuse an open-source one. As the whole logic lives in the load testing script, the benchmark becomes easily reproducible, which is exactly what we need.
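To illustrate why keeping the whole workload inside one script helps reproducibility, here is a minimal sketch. k6 itself runs JavaScript test scripts; Python is used here only for illustration, and the workload (metric name, labels, seed, step) is entirely hypothetical rather than taken from the Grafana script. Because every sample is derived from a fixed seed, two runs with the same arguments produce exactly the same data to send to the remote storage.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One sample of a synthetic time series (hypothetical workload)."""
    labels: tuple  # sorted (name, value) pairs, Prometheus-style
    value: float
    timestamp_ms: int


def generate_batch(seed: int, series_count: int, start_ms: int, step_ms: int, steps: int) -> list:
    """Build a deterministic batch of samples: same arguments, same batch."""
    rng = random.Random(seed)  # private RNG, so the global random state is untouched
    batch = []
    for i in range(series_count):
        # Hypothetical label set; a real script would define its own cardinality.
        labels = (
            ("__name__", "k6_synthetic_metric"),
            ("instance", f"instance-{i % 10}"),
            ("series_id", str(i)),
        )
        for s in range(steps):
            batch.append(Sample(labels, rng.random(), start_ms + s * step_ms))
    return batch


if __name__ == "__main__":
    a = generate_batch(seed=42, series_count=100, start_ms=0, step_ms=15_000, steps=4)
    b = generate_batch(seed=42, series_count=100, start_ms=0, step_ms=15_000, steps=4)
    print(len(a), a == b)  # 400 True
```

Changing the seed, the number of series or the step changes the load in a controlled way, which is what makes two benchmark runs comparable.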

To launch a benchmark, you need two pieces of infrastructure:

- Somewhere to run k6, for example a c2-120 instance on our Public Cloud.

- A remote storage to benchmark. For a quick start, you are a single Helm command away from running it on Kubernetes.

For our use case, we chose to reuse the load testing script from Grafana, which does exactly what we were looking for. k6 outputs all the information needed to tune and assess your remote storage:

```text
✓ write worked

█ instant query high cardinality

  ✓ expected request status to equal 200
  ✓ has valid json body
  ✓ expected status field to equal 'success'
  ✓ expected data.resultType field to equal 'vector'

█ range query

  ✓ expected request status to equal 200
  ✓ has valid json body
  ✓ expected status field is 'success' to equal 'success'
  ✓ expected resultType is 'matrix' to equal 'matrix'

█ instant query low cardinality

  ✓ expected request status to equal 200
  ✓ has valid json body
  ✓ expected status field to equal 'success'
  ✓ expected data.resultType field to equal 'vector'

checks............................................................................: 100.00% ✓ 1454 ✗ 0
✓ { type:read }...................................................................: 0.00% ✓ 0 ✗ 0
✓ { type:write }..................................................................: 100.00% ✓ 6 ✗ 0
data_received.....................................................................: 1.0 MB 8.4 kB/s
data_sent.........................................................................: 277 kB 2.3 kB/s
group_duration....................................................................: avg=64.61ms min=39.94ms med=60.43ms max=230.05ms p(90)=80.39ms p(95)=107.93ms
http_req_blocked..................................................................: avg=4.65ms min=2µs med=6µs max=96.84ms p(90)=11µs p(95)=58.42ms
http_req_connecting...............................................................: avg=1.31ms min=0s med=0s max=21.87ms p(90)=0s p(95)=16.99ms
http_req_duration.................................................................: avg=53.7ms min=34.23ms med=52.71ms max=164.1ms p(90)=67.02ms p(95)=71.82ms
{ expected_response:true }......................................................: avg=53.7ms min=34.23ms med=52.71ms max=164.1ms p(90)=67.02ms p(95)=71.82ms
✓ { type:read }...................................................................: avg=53.8ms min=34.23ms med=52.76ms max=164.1ms p(90)=66.85ms p(95)=71.62ms
✓ { url:https://admin:security-matters@remote-storage.poc.ovh.net/api/v1/push }...: avg=0s min=0s med=0s max=0s p(90)=0s p(95)=0s
http_req_failed...................................................................: 0.00% ✓ 0 ✗ 368
http_req_receiving................................................................: avg=92.34µs min=32µs med=89µs max=301µs p(90)=125.3µs p(95)=150µs
http_req_sending..................................................................: avg=49.05µs min=12µs med=40µs max=566µs p(90)=68µs p(95)=94.59µs
http_req_tls_handshaking..........................................................: avg=3.11ms min=0s med=0s max=54.28ms p(90)=0s p(95)=39.39ms
http_req_waiting..................................................................: avg=53.56ms min=33.94ms med=52.56ms max=163.93ms p(90)=66.88ms p(95)=71.66ms
http_reqs.........................................................................: 368 3.064697/s
iteration_duration................................................................: avg=64.88ms min=40.34ms med=60.78ms max=230.27ms p(90)=80.87ms p(95)=108.47ms
iterations........................................................................: 368 3.064697/s
vus...............................................................................: 26 min=26 max=26
vus_max...........................................................................: 26 min=26 max=26
```
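The read-path checks in this summary correspond to the response format of the Prometheus HTTP query API. As a minimal sketch (in Python, not the Grafana script's actual code, and with a hypothetical function name), the same four checks can be applied to a query response: status 200, valid JSON body, `status` equal to `success`, and the expected `data.resultType` (`vector` for instant queries, `matrix` for range queries). For simplicity it reuses the instant-query check names for both query types.

```python
import json


def run_read_checks(status_code: int, body: str, expected_result_type: str) -> dict:
    """Evaluate the four read-path checks; each value is True when the check passes."""
    type_check = f"expected data.resultType field to equal '{expected_result_type}'"
    results = {
        "expected request status to equal 200": status_code == 200,
        "has valid json body": False,
        "expected status field to equal 'success'": False,
        type_check: False,
    }
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return results  # without a JSON body the remaining checks cannot pass
    results["has valid json body"] = True
    if isinstance(payload, dict):
        # Prometheus HTTP API envelope: {"status": ..., "data": {"resultType": ..., "result": [...]}}
        results["expected status field to equal 'success'"] = payload.get("status") == "success"
        data = payload.get("data")
        if isinstance(data, dict):
            results[type_check] = data.get("resultType") == expected_result_type
    return results


if __name__ == "__main__":
    instant = '{"status":"success","data":{"resultType":"vector","result":[]}}'
    print(all(run_read_checks(200, instant, "vector").values()))  # True
```

Keeping each check separate, as k6 does, tells you whether a failure comes from the HTTP layer, a malformed body, or a query that returned the wrong result type.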

What a time saver! With k6, we were able to efficiently assess all the remote storage solutions. This is a significant improvement over our previous benchmarking plan.
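Comparing remote storage solutions mostly comes down to comparing these end-of-test summaries run after run. As a minimal sketch, assuming you keep k6's plain-text summary, the hypothetical helpers below extract the latency statistics of a trend line such as `http_req_duration` (converted to milliseconds) and estimate the test duration from a counter line as count divided by rate: with `368 3.064697/s`, that gives about 120 s. k6 can also produce a machine-readable summary (for example through a `handleSummary()` function), which is more robust to parse; text parsing is used here only because it matches the output shown in this post.

```python
import re

# Conversion factors from the units k6 prints in trend summaries to milliseconds.
_UNIT_TO_MS = {"µs": 0.001, "us": 0.001, "ms": 1.0, "s": 1000.0}
# "name.....: values"; sub-metric names such as "{ type:read }" may contain colons.
_LINE = re.compile(r"^\s*(.*?)\.{3,}:\s*(.*)$")
# One trend statistic, e.g. "avg=53.7ms" or "p(95)=71.82ms" (minute units are not handled).
_STAT = re.compile(r"(avg|min|med|max|p\(\d+(?:\.\d+)?\))=([\d.]+)(µs|us|ms|s)")
# Counter values, e.g. "368 3.064697/s": total count, then rate per second.
_COUNTER = re.compile(r"([\d.]+)\s+([\d.]+)/s")


def _split(line: str) -> tuple:
    """Split a summary line into (metric name, raw values)."""
    match = _LINE.match(line)
    if match is None:
        raise ValueError(f"not a k6 summary line: {line!r}")
    name, values = match.groups()
    return name.strip(), values


def parse_trend(line: str) -> tuple:
    """Return (metric name, {statistic: milliseconds}) for a k6 trend line."""
    name, values = _split(line)
    stats = {key: float(num) * _UNIT_TO_MS[unit] for key, num, unit in _STAT.findall(values)}
    return name, stats


def run_duration_s(counter_line: str) -> float:
    """Estimate the test duration in seconds as count / rate from a counter line."""
    _, values = _split(counter_line)
    match = _COUNTER.search(values)
    if match is None:
        raise ValueError(f"no 'count rate/s' pair in: {counter_line!r}")
    return float(match.group(1)) / float(match.group(2))


if __name__ == "__main__":
    line = "http_req_duration...: avg=53.7ms min=34.23ms med=52.71ms max=164.1ms p(90)=67.02ms p(95)=71.82ms"
    _, duration = parse_trend(line)
    print(duration["p(95)"])  # 71.82
    print(round(run_duration_s("http_reqs...: 368 3.064697/s"), 1))  # 120.1
```

Storing the parsed p(90) and p(95) values per remote storage and per run makes regressions or improvements visible at a glance.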

The next and final post will cover which remote storage we chose as our internal solution.

Stay tuned.

In his position as the leader of an SRE team, Wilfried focuses on prioritizing sustainability, resilience, and industrialization to guarantee customer satisfaction. Additionally, Wilfried is dedicated to contributing to open-source initiatives.