At OVHcloud, we are constantly looking for ways to improve our operations and reduce our impact on the environment. This has been a defining part of the company since 1999 and is a key part of our organisational DNA and our commercial model.

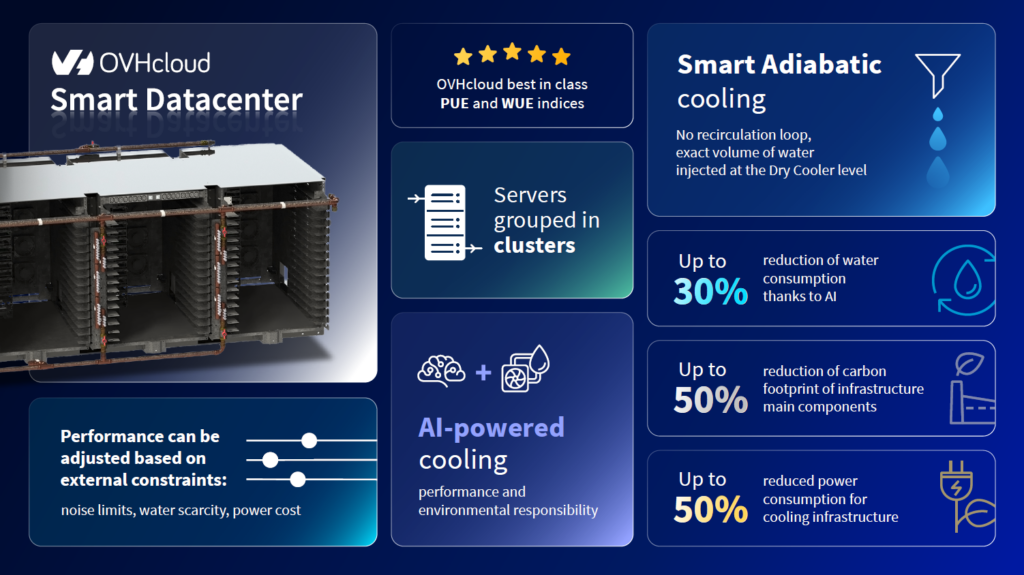

We are very proud to present the new Smart Datacenter cooling system, which significantly improves energy and water efficiency while substantially reducing carbon impact across the entire cooling chain, from manufacturing and transport to daily operations.

The system is a new way of building and deploying datacenter infrastructure, changing how we manage and monitor water supply and demand, using a combination of industrial design, IoT sensors and AI innovation, specifically in our smart racks, advanced cooling distribution units (CDUs) and intelligent dry coolers.

Smart Datacenter delivers a reduction in power consumption of up to 50% across the entire cooling loop, from server water blocks to dry coolers, and consumes 30% less water compared to OVHcloud’s earliest design, driving major sustainability benefits. The system also uses complex mathematical models capturing detailed rack-level and environmental data to optimize cooling performance in real time. Furthermore, all operational data is fed into a centralized data lake, enabling cutting-edge artificial intelligence to predict, adapt, and enhance system efficiency and reliability.

Let’s get into the detail.

The system has three main components:

- Smart Racks: These are designed with an innovative hydraulic “pull” architecture, where each rack autonomously draws exactly the water flow, pressure, and temperature it needs, dynamically adapting to server load and performance.

- Advanced Cooling Distribution Unit (CDU): This is a compact, next-generation primary loop unit that autonomously balances flow and pressure across all racks without manual intervention or any electrical communication. It uses only hydraulic signals (pressure, flow and temperature of water) to “understand” rack demands and continuously optimizes operation for lowest power consumption and extended pump lifespan.

- Intelligent Dry Cooler: This is operated seamlessly by the CDU, eliminating the need for separate control systems (“brains”) on both the dry cooler and the CDU. This unified control architecture ensures optimized, coordinated performance across the entire cooling infrastructure.

OVHcloud’s new Single-Circuit System (SCS) replaces the previous Dual-Circuit System cooling architecture (DCS), which consisted of a primary facility loop and a secondary in-rack loop separated by an in-rack Coolant Distribution Unit (CDU), installed inline directly after the rear door heat exchangers (RDHX), as shown in Figure 1. The CDU housed multiple pumps, several plate heat exchangers (PHEX), and a network of valves and sensors.

Figure 1. Dual-Circuit System cooling architecture (DCS) vs Single-Circuit system (SCS).

That previous design maintained turbulent flow through water blocks (WBs) using the in-rack CDU to regulate flow and temperature differences, ensuring performance despite OVHcloud’s ΔT of 20 K on the primary loop (far higher than the typical market value around 5 K).
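To see why a higher ΔT matters, the steady-state heat balance Q = ṁ · c_p · ΔT can be turned into a small calculation. The sketch below is illustrative only: the 20 kW rack load is a hypothetical figure, and the water properties are approximate textbook values, not OVHcloud measurements.

```python
# Approximate properties of water near room temperature (assumed values).
CP_WATER_J_PER_KG_K = 4186.0
RHO_WATER_KG_PER_L = 0.998


def required_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Water flow needed to carry heat_load_w at a given supply/return temperature difference."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_load_w / (CP_WATER_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / RHO_WATER_KG_PER_L * 60.0


rack_load_w = 20_000.0  # hypothetical 20 kW rack
flow_20k = required_flow_l_per_min(rack_load_w, 20.0)
flow_5k = required_flow_l_per_min(rack_load_w, 5.0)
print(f"{flow_20k:.1f} L/min at 20 K vs {flow_5k:.1f} L/min at 5 K")
```

For the same heat load, a ΔT of 20 K needs a quarter of the flow that a ΔT of 5 K would, which is why keeping the flow turbulent at low rack density becomes a design concern.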

Removing the in-rack CDU, which is replaced by a Pressure Independent Control Valve (PICV), a flow meter, and two temperature sensors on each rack, simplifies the system to a single closed loop, where the flow rate through servers is dictated directly by the primary loop, adapting dynamically to rack load density. On the rack side, the system delivers exactly the flow the rack requires by analyzing the water behavior and performing iterative, predictive thermal optimization that considers the IT components and the supplied water temperature and flow. This results in lower inlet water temperatures at the server level due to the elimination of the in-rack CDU’s approach temperature difference, and reduces electrical consumption, CAPEX, carbon footprint, and rack footprint.

To prevent laminar flow and maintain heat transfer efficiency at low flow rates, OVHcloud introduced a passive hydraulic innovation by arranging servers into clusters connected in series with servers inside each cluster connected in parallel, rather than all servers in parallel. This ensures higher water flow through individual servers even when the rack density is low. While this increases system pressure drops depending on cluster configuration, it results in better thermal performance and all servers receive water at temperatures equal to or lower than in the previous DCS design.
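The effect of the cluster arrangement on per-server flow can be sketched in a few lines. This is a simplified model that assumes identical servers with equal hydraulic resistance, so that flow splits evenly inside a cluster; the server count and rack flow are hypothetical.

```python
def flow_per_server(rack_flow_l_min: float, n_servers: int, n_clusters: int) -> float:
    """Per-server flow when servers are split into equal clusters connected in series.

    Clusters in series each see the full rack flow; servers inside a
    cluster are in parallel and share that flow equally (assumed).
    n_clusters=1 reproduces the all-parallel layout.
    """
    if n_clusters < 1 or n_servers % n_clusters != 0:
        raise ValueError("n_servers must be a positive multiple of n_clusters")
    servers_per_cluster = n_servers // n_clusters
    return rack_flow_l_min / servers_per_cluster


all_parallel = flow_per_server(12.0, n_servers=48, n_clusters=1)
four_clusters = flow_per_server(12.0, n_servers=48, n_clusters=4)
print(all_parallel, four_clusters)
```

With the same rack flow, four clusters in series give each server four times the flow of the all-parallel layout, at the cost of a higher pressure drop across the rack.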

The racks operate on a novel hydraulic “pull” principle — where each rack draws exactly the hydraulic power it requires, rather than being pushed by the system. The CDU then dynamically adapts the overall hydraulic performance of the primary loop, balancing flow and pressure in real time to match the actual demand of the entire data center.

A key breakthrough is the CDU’s communication-free operation: it requires no cables, radio waves, or other electronic communication with racks. Instead, it analyzes hydraulic signals (pressure, flow, and temperature fluctuations within the water itself) to understand each rack’s cooling needs and adapt accordingly. This eliminates complex telemetry infrastructure, reduces operational risks, and enhances system reliability. To ensure water quality and system longevity, water supplied to the data center is filtered at 25 microns, and multiple high-precision sensors continuously monitor water quality in real time.

The CDU is 50% smaller than the previous generation and manages the entire thermal path — from chip-level water blocks, through the racks and CDU, to the dry coolers.

The newly designed dry cooler is also 50% smaller than the previous model and features one of the lowest density footprints worldwide. Thanks to years of thermal studies on heat exchangers by the OVHcloud R&D team, it has 50% fewer fans, resulting in very low energy consumption, while also reducing noise. Its compact size means that we can also transport more units in the same truck! This design achieves a 30% reduction in water consumption compared to OVHcloud’s earliest dry cooler design. A key innovation in the dry cooler is its advanced adiabatic cooling pads system, which cools incoming hot air before it passes through the heat exchangers. This high-precision water injection system is the first of its kind, and adjusts water application based on multiple sensors and extensive iterative calculations, including data center load, ambient temperature, and humidity levels.

Unlike traditional adiabatic systems, the pad system does not use a conventional recirculation loop. Instead, water is injected onto the pads when needed via a simple setup consisting of a solenoid valve and a flow meter, eliminating complex hydraulics such as pumps, filters, storage tanks, level sensors, and conductivity sensors. The system maintains water quality and physical/chemical properties through careful design, drastically simplifying operation and reducing maintenance needs.
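One way to picture a solenoid valve paired with a flow meter is as a simple dosing loop: the valve stays open until the metered volume reaches a target. The sketch below is an assumption about how such a loop could work, not OVHcloud's control code; the function name, sampling period, and readings are hypothetical.

```python
from typing import Iterable, Tuple


def dose_pads(
    target_litres: float,
    flow_readings_l_min: Iterable[float],
    sample_period_s: float = 1.0,
) -> Tuple[int, float]:
    """Keep the valve open until the integrated flow reaches target_litres.

    Returns (number of sample periods the valve was open, litres delivered).
    """
    delivered = 0.0
    open_periods = 0
    for reading in flow_readings_l_min:
        if delivered >= target_litres:
            break  # target reached: close the solenoid valve
        delivered += reading * sample_period_s / 60.0
        open_periods += 1
    return open_periods, delivered


# Hypothetical example: a steady 6 L/min reading, 0.45 L requested.
periods, litres = dose_pads(0.45, [6.0] * 10)
print(periods, round(litres, 3))
```

Because the dose is metered rather than recirculated, no tank, pump, or level sensor appears anywhere in the loop.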

The CDU continuously analyzes data from up to 36 sensors distributed across the CDU itself and the associated dry cooler. It also collects operational data from solenoid valves, pumps, and dry cooler fans across the infrastructure loop. All components are monitored and managed by the system’s central intelligence—the CDU’s control panel—providing a comprehensive understanding of the entire system’s behavior, from the data center interior to the external ambient environment, ensuring real-time performance oversight and precise thermal regulation.

Through this iterative and precise control of water injection, the system optimizes cooling performance and Water Usage Effectiveness (WUE), ensuring minimal water consumption without sacrificing thermal effectiveness.
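WUE is commonly defined as annual site water usage in litres divided by IT equipment energy in kWh. The sketch below shows how a 30% reduction in water use carries through to that metric; the water and energy totals are hypothetical, chosen only to make the arithmetic visible.

```python
def water_usage_effectiveness(annual_water_l: float, annual_it_energy_kwh: float) -> float:
    """WUE in litres per kWh of IT energy (lower is better)."""
    if annual_it_energy_kwh <= 0:
        raise ValueError("annual_it_energy_kwh must be positive")
    return annual_water_l / annual_it_energy_kwh


it_energy_kwh = 5_000_000.0   # hypothetical annual IT energy
baseline_water_l = 1_000_000.0  # hypothetical annual water use of an older design
reduced_water_l = baseline_water_l * (1 - 0.30)  # 30% less water

wue_before = water_usage_effectiveness(baseline_water_l, it_energy_kwh)
wue_after = water_usage_effectiveness(reduced_water_l, it_energy_kwh)
print(f"WUE {wue_before:.2f} -> {wue_after:.2f} L/kWh")
```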

Advanced System Analytics, Learning & AI Integration

The entire system is designed to continuously analyze the thermal, hydraulic, and aerodynamic behaviors of the various fluids along the cooling path. It uses daily operational data to learn and adapt its performance dynamically, optimizing cooling efficiency and reliability over time.

The CDU’s brain—the control panel—aggregates data from 36 sensors distributed across the CDU and dry cooler, as well as operational data from solenoid valves, pumps, and dry cooler fans within the infrastructure loop. It also collects critical rack-level information, including flow rates, temperatures, and IPMI data that reflect IT equipment behavior and performance. All this operational data is pushed to a centralized data lake for parallel analysis, which forms the foundation for the next step: integrating cutting-edge artificial intelligence (AI). This AI will leverage the continuously gathered data and learning processes to enhance predictive capabilities, optimize future operating points, and enable fully autonomous decision-making.

This combination of real-time learning and AI-powered analytics will provide advanced diagnostics, predictive maintenance, and proactive management — maximizing uptime, reducing costs, and driving ever-greater sustainability.

Iterative Control System Innovation

The iterative control system manages all aspects in real time, hands-free, continuously learning from sensor data and operational feedback. It applies algorithms to the pump speed on the CDU, the fans on the dry cooler and the solenoid valve controlling water injection on the adiabatic pads.

On the rack side, the system uses a PICV, a flow meter, and two temperature sensors to deliver exactly the hydraulic flow needed by each rack, considering IT load and incoming water conditions, iteratively optimizing thermal performance and energy efficiency.
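As a rough illustration of iterative rack-side regulation, the sketch below nudges the flow setpoint until the measured temperature rise across the rack matches a target ΔT. It is a minimal sketch under stated assumptions: the relaxation rule, gain, flow limits, and the steady-state rack model are hypothetical stand-ins, not OVHcloud's algorithm.

```python
CP_WATER_J_PER_KG_K = 4186.0  # approximate specific heat of water
RHO_WATER_KG_PER_L = 0.998    # approximate density of water


def rack_delta_t_k(heat_load_w: float, flow_l_min: float) -> float:
    """Steady-state temperature rise across a rack (toy model standing in for the real rack)."""
    mass_flow_kg_s = flow_l_min * RHO_WATER_KG_PER_L / 60.0
    return heat_load_w / (mass_flow_kg_s * CP_WATER_J_PER_KG_K)


def next_flow_setpoint(
    flow_l_min: float,
    t_in_c: float,
    t_out_c: float,
    target_dt_k: float = 20.0,
    gain: float = 0.5,
    min_flow_l_min: float = 1.0,
    max_flow_l_min: float = 60.0,
) -> float:
    """Move the flow setpoint part of the way toward the flow that would give target_dt_k."""
    measured_dt_k = t_out_c - t_in_c
    ideal_flow = flow_l_min * measured_dt_k / target_dt_k
    new_flow = flow_l_min + gain * (ideal_flow - flow_l_min)
    return min(max(new_flow, min_flow_l_min), max_flow_l_min)


def simulate(heat_load_w: float, start_flow_l_min: float, t_in_c: float = 30.0, steps: int = 25) -> float:
    """Iterate the controller against the toy rack model and return the final flow."""
    flow = start_flow_l_min
    for _ in range(steps):
        t_out_c = t_in_c + rack_delta_t_k(heat_load_w, flow)
        flow = next_flow_setpoint(flow, t_in_c, t_out_c)
    return flow


final_flow = simulate(heat_load_w=20_000.0, start_flow_l_min=30.0)
print(f"settled at {final_flow:.2f} L/min")
```

The only inputs the controller needs are the flow meter reading and the two temperature sensors, which matches the sensor set described for each rack.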

On the CDU, the system analyzes combined hydraulic signals from all racks alongside ambient data center conditions, dynamically balancing flow and pressure across the entire data center infrastructure without human intervention.

Furthermore, OVHcloud’s cooling system integrates intelligent communication between cooling line-ups to enhance failure detection and simplify maintenance. This is achieved through embedded freeze-guard and resilience-switch mechanisms that ensure continuous operation and system resilience. The freeze-guard system is designed to protect the dry coolers in sub-zero ambient conditions by keeping water circulating through their heat exchangers. If the overall loop flow drops below a predefined threshold, the system automatically opens a normally closed bypass valve to maintain circulation, preventing freezing despite the use of pure water (without glycol) as the cooling medium. The resilience-switch system maintains redundancy by hydraulically linking multiple cooling lines. In the event of failure or overload on one line, normally open solenoid valves close to isolate the affected line, while bypass valves on neighboring lines open to redistribute water flow and maintain cooling performance. This dynamic and autonomous valve management ensures uninterrupted service and rapid fault response.
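The valve logic described above can be sketched as two small decision functions. This is an illustrative model only: the flow threshold, the treatment of cooling lines as a simple ordered list, and all names are assumptions made for the example.

```python
from typing import Dict, List


def freeze_guard_bypass_open(loop_flow_l_min: float, min_flow_l_min: float = 5.0) -> bool:
    """The normally closed bypass opens when loop flow falls below the threshold."""
    return loop_flow_l_min < min_flow_l_min


def resilience_switch(line_healthy: List[bool]) -> List[Dict[str, bool]]:
    """Valve states per cooling line, assuming lines are linked to their list neighbours.

    A failed line is isolated (its normally open valve closes); healthy
    lines next to a failed one open their bypass to take over the flow.
    """
    states = []
    for i, healthy in enumerate(line_healthy):
        neighbour_failed = any(
            not line_healthy[j] for j in (i - 1, i + 1) if 0 <= j < len(line_healthy)
        )
        states.append(
            {
                "isolation_valve_open": healthy,
                "bypass_valve_open": healthy and neighbour_failed,
            }
        )
    return states


# Hypothetical scenario: the second of four lines fails.
valve_states = resilience_switch([True, False, True, True])
for index, state in enumerate(valve_states):
    print(index, state)
```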

Drawing inspiration from autonomous control methodologies in leading-edge industries, the system predicts future behavior based on iterative calculations, dynamically adapting pump speed, fan speed, and solenoid valve openings to converge rapidly on optimal operating points. It also adjusts performance based on external constraints such as noise limits, water availability, or energy costs: for example, consuming more energy to save water in water-stressed regions, or respecting noise restrictions in urban deployments.
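The trade-off between energy, water, and noise can be pictured as choosing the cheapest operating point that respects a hard noise limit. The sketch below is a toy selection rule with hypothetical operating points and prices; the actual predictive control is not described in enough detail here to reproduce.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class OperatingPoint:
    name: str
    power_kw: float
    water_l_per_h: float
    noise_dba: float


def pick_operating_point(
    candidates: List[OperatingPoint],
    energy_cost_per_kwh: float,
    water_cost_per_l: float,
    noise_limit_dba: Optional[float] = None,
) -> OperatingPoint:
    """Return the candidate with the lowest hourly cost among those within the noise limit."""
    allowed = [
        c for c in candidates
        if noise_limit_dba is None or c.noise_dba <= noise_limit_dba
    ]
    if not allowed:
        raise ValueError("no operating point satisfies the noise limit")
    return min(
        allowed,
        key=lambda c: c.power_kw * energy_cost_per_kwh + c.water_l_per_h * water_cost_per_l,
    )


# Hypothetical candidates: fans only vs. fans assisted by the adiabatic pads.
points = [
    OperatingPoint("fans_only", power_kw=12.0, water_l_per_h=0.0, noise_dba=70.0),
    OperatingPoint("pads_assist", power_kw=6.0, water_l_per_h=100.0, noise_dba=62.0),
]

default_choice = pick_operating_point(points, energy_cost_per_kwh=0.20, water_cost_per_l=0.002)
water_stressed = pick_operating_point(points, energy_cost_per_kwh=0.20, water_cost_per_l=0.05)
urban_site = pick_operating_point(points, 0.20, 0.05, noise_limit_dba=65.0)
print(default_choice.name, water_stressed.name, urban_site.name)
```

Raising the water price flips the choice toward spending more energy, and adding a noise limit overrides cost altogether, mirroring the two examples in the paragraph above.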

This unique, self-optimizing end-to-end control system maximizes energy efficiency, sustainability, and operational simplicity, extending pump life cycles and ensuring the most environmentally responsible data center cooling solution available today.

This vertically integrated, autonomous system — including smart racks, the advanced CDU, and the intelligent dry cooler — represents a world-first in end-to-end, intelligent, sustainable, communication-free, and data-driven data center cooling.

Why is this important?

This innovation is critical because it marks a decisive step toward radically more sustainable, efficient, and autonomous data center cooling — addressing the growing demands of digital infrastructure while reducing its environmental footprint.

By using fewer, smaller components, we are saving power, cutting transport costs and reducing carbon impact. Using fewer fans on the dry cooler means up to 50% lower energy consumption on the cooling cycle, and the new pad system means 30% lower water consumption in the cooling system. The system is fully autonomous, avoiding human error. A temperature difference (ΔT) of 20 K on the primary loop, four times the typical market value of around 5 K, means that flow rates can be lower and water efficiency is higher. The system doesn’t rely on Wi-Fi or cabling, and the predictive control constantly adapts to external conditions or situational goals, feeding into a data lake to help continuously optimize performance.

Today’s world is built on technology, and datacenters are a key part of that technology, but there is a pressing need to ensure we can maintain human progress without incurring a significant carbon footprint. Power and water efficiency are a key part of this equation in the datacenter industry, and our innovation in the Smart Datacenter continues our trajectory of supporting today’s needs without compromising the world of tomorrow.