A growing number of companies are using artificial intelligence on a daily basis — and dealing with the back-end architecture can reveal some unexpected challenges.

Whether the machine learning workload involves fraud detection, forecasts, chatbots, computer vision or NLP, it will need frequent access to computing power for training and fine-tuning.

GPUs have proven to be a game-changer for deep learning. If you’re wondering why, you can find out more by reading our blog post about GPU architectures. A few years ago, manufacturers such as NVIDIA began to develop specific ranges for cloud datacentres. You may be familiar with the NVIDIA TITAN RTX for gaming — and in our datacentres, we use NVIDIA A100, V100, Tesla and DGX GPUs for enterprise-grade workloads.

In short, GPUs are perfect for tasks that can be solved or improved by AI, and require a lot of processing power.

They deliver massively parallel compute and are widely used in deep learning, which makes them the natural choice for the growing number of companies adopting AI.

However, when dealing with pools of GPUs, the back-end architecture can be really tricky.

So how do we use them to benefit a company with minimal hassle and headaches? On-premise or in the cloud?

These are good questions that I’m keen to discuss here, from both a business and technical perspective.

Dealing with GPU pools… The struggle is real.

For anyone who has had to deploy and manage more than 1 GPU for a data-AI team, I’m sure this topic will bring tears to your eyes, and make your voice tremble. Yes, it is indeed complicated.

I can talk about it on our blog, because our team of data scientists here at OVHcloud had to deal with the exact same annoying issues. Thankfully, we solved all of them — stay tuned!

GPU sharing is hard. Even if one GPU is better than none, in most cases it will not be sufficient, and a GPU pool will be far more effective. From a tech perspective, dealing with a GPU pool (or worse, allowing your team to use this pool simultaneously) is very tricky. The market is really mature for CPU sharing (via hypervisors), but by design, a GPU has to be attached to a VM or container. This means that quite often, it needs to be “booked” for a specific workload. To get around this issue, you’ll need to scale out with an orchestration layer, so that you can dynamically assign GPUs to jobs over time. Whenever you tell yourself “I want to launch this task with 4 GPUs for 2 days”, you should simply be able to ask, and the back-end should work its magic for you.
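To make the idea of dynamically assigning GPUs to jobs more concrete, here is a toy sketch in bash. It is not how any real orchestrator works internally: it simply walks a list of requests and starts each job that fits in the remaining free GPUs, queuing the others. The function name, pool size and job names are all hypothetical.

```shell
#!/usr/bin/env bash
# Toy greedy allocator: a job starts if its GPU request fits in the free pool,
# otherwise it goes to the backlog. Names and numbers are illustrative only.
schedule_jobs() {
  local free=$1; shift          # number of free GPUs in the pool
  local job name gpus
  for job in "$@"; do           # each job is written as "name:gpus"
    name=${job%%:*}
    gpus=${job##*:}
    if (( gpus <= free )); then
      free=$(( free - gpus ))
      echo "RUN   $name ($gpus GPUs, $free left in pool)"
    else
      echo "QUEUE $name ($gpus GPUs requested, only $free free)"
    fi
  done
}

# A pool of 8 GPUs and four hypothetical requests
schedule_jobs 8 "fraud-model:4" "chatbot:2" "vision:4" "forecast:2"
```

With these numbers, three jobs start and the second 4-GPU request has to wait. A real scheduler also has to handle job duration, priorities and preemption, which is exactly where the complexity comes from.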

Setting up and maintaining an architecture is time-consuming. So you’ve deployed servers with GPU, updated and upgraded your Linux distros, installed your main AI packages, CUDA drivers, and now you want to move on to something else. But wait — a new TensorFlow version has been released, and you also have a security patch to apply. What you initially thought to be a single task is now taking up 4-5 hours of your time per week.

Diagnosing is quite complex. If, for whatever reason, something isn’t working as it should — good luck. You barely know who is doing what, and you can’t track jobs or usage unless you connect to the platform yourself and set up monitoring tools. Remember to grab your snorkel set, because you’ll need to deep-dive.
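As a small illustration of the kind of monitoring you end up writing yourself, the sketch below flags GPUs that look idle. It assumes CSV input in the layout produced by `nvidia-smi --query-gpu=index,utilization.gpu,memory.used --format=csv,noheader,nounits`; the function name, the 5% threshold and the sample figures are made up for the example.

```shell
#!/usr/bin/env bash
# Read "index, utilisation %, memory used (MiB)" lines on stdin and report
# GPUs whose utilisation is at or below a threshold (default: 5%).
report_idle_gpus() {
  local threshold=${1:-5}
  awk -F', *' -v t="$threshold" \
    '$2 + 0 <= t { print "GPU " $1 " looks idle (" $2 "% util, " $3 " MiB used)" }'
}

# Made-up sample data; on a real GPU server you would pipe nvidia-smi into the function.
report_idle_gpus 5 <<'EOF'
0, 97, 15210
1, 0, 3
2, 88, 14002
3, 2, 450
EOF
```

This only tells you which GPUs are idle on one server, not who booked them or why, so it is a starting point rather than a diagnosis tool.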

Bottlenecks are almost inevitable. Imagine setting up a pool of GPUs based on your current AI project workloads. Your infrastructure is not really designed to scale automatically, and as soon as the AI workloads increase, your jobs have to be scheduled while the GPU fleet is being updated constantly. A backlog starts to accumulate, and a bottleneck is created as a result.

Providing tools for teams to work collaboratively on code is mandatory. Usually, your team will need to share their data experiments, and the best way to do this for now is with JupyterLab notebooks (we love them) or VSCode. But you’ll need to keep in mind that this is more software to set up and maintain.

Securing data access is essential. The required data must be easily accessible, and sensitive data must be covered by security guarantees.

Cost control is difficult. Even worse, for one reason or another (who said holidays?), you might need to stop almost all your GPU servers for a week or two — but to do this, you would need to wait for any ongoing jobs to be completed.

All jokes aside, while we may be passionate about tech and hardware, we have other things to do. Data engineers cannot achieve their full potential and talent in maintenance-based or billing-based tasks.

Kubeflow to the rescue?

Kubernetes 1.0 was launched 5 years ago. Whatever your opinion of it, in those five years it has become the de facto standard for container orchestration in enterprise environments.

Data scientists use containers for portability, agility, and community, but Kubernetes was made to orchestrate services, not data experiments.

Kubernetes alone is not tailored for a data team. It presents too much complexity, with the sole benefit of solving the orchestration issue.

We need something that not only improves orchestration, but also code contribution, tests and deployments.

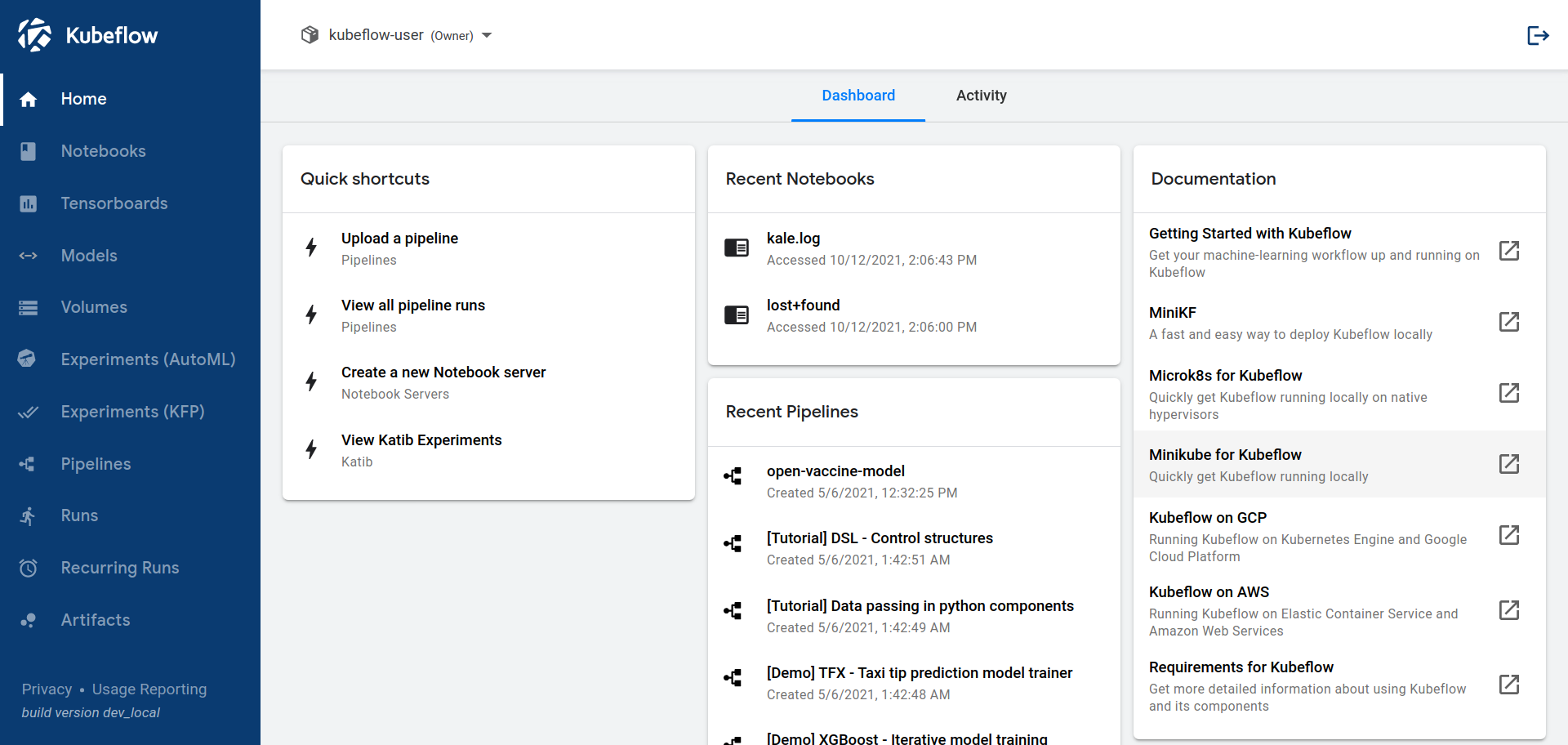

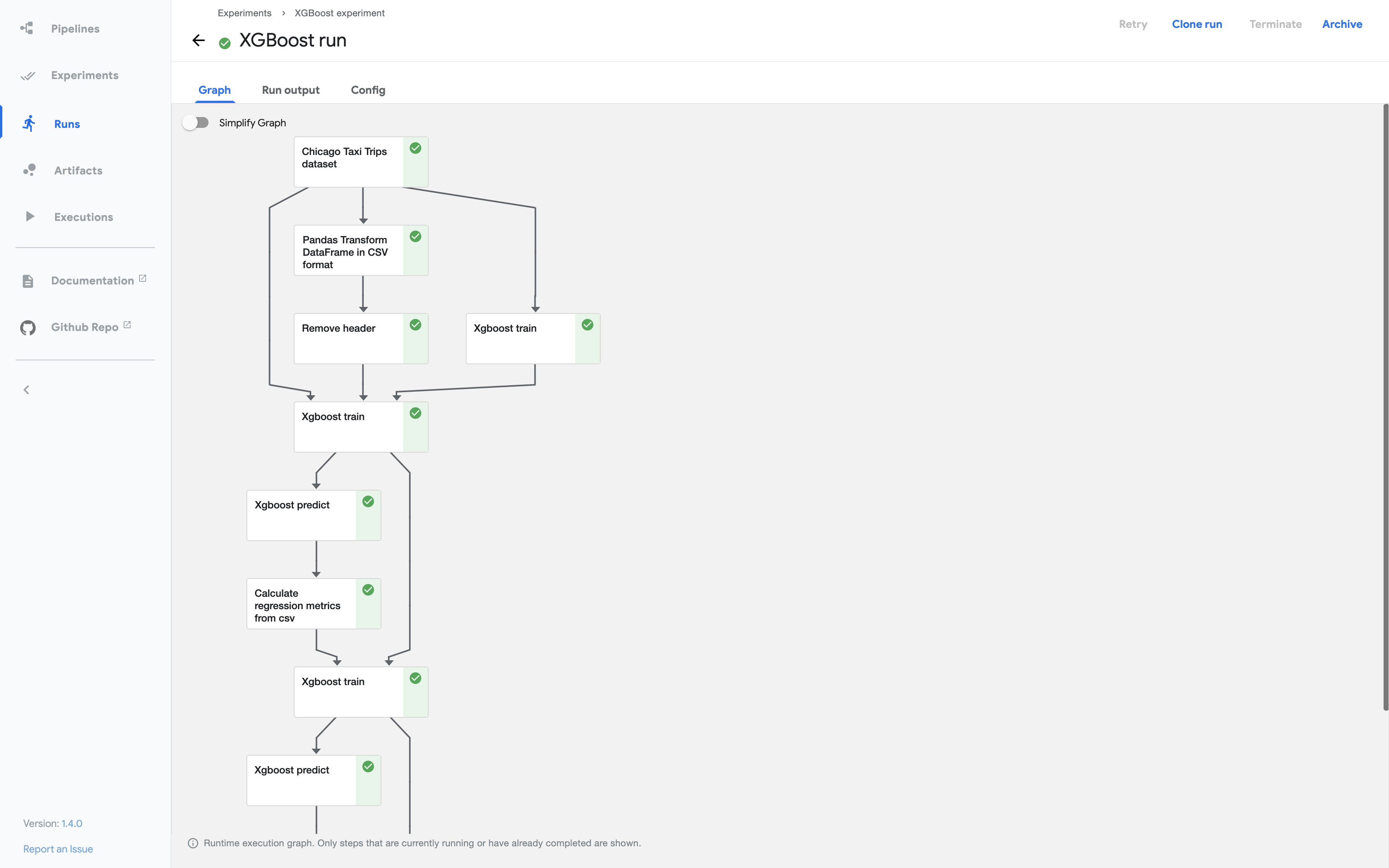

Luckily, Kubeflow appeared 2 years ago, and was open-sourced by Google at the time. Its main promise is to simplify complex ML workflows (for example data processing => data labeling => training => serving) and to complement them with notebooks.

I do really love the promise, and the way they simplify ML pipelines. Kubeflow can be run over K8s clusters on-premise or in the cloud, and can also be set up on a single VM or even on a workstation (Linux/Mac/Windows).

Students can easily have their own ML environment. However, for the most advanced uses, a workstation or a single VM might be out of the question, and you would need a K8s cluster with Kubeflow installed on top of that. You’ll have a nice UI for starting notebooks and creating ML pipelines (processing/training/inference), but still zero GPU support by default.

Your GPU support will depend on your setup. It may differ if you host it on GCP, AWS, Azure, OVHcloud, on-premise, MicroK8s, or anything else.

For example, on AWS EKS, you need to declare GPU pools in your Kubeflow manifest:

```yaml
# Official doc: https://www.kubeflow.org/docs/aws/customizing-aws/
# NodeGroup holds all configuration attributes that are specific to a node group
# You can have several node groups in your cluster.
nodeGroups:
  - name: eks-gpu
    instanceType: p2.xlarge
    availabilityZones: ["us-west-2b"]
    desiredCapacity: 2
    minSize: 0
    maxSize: 2
    volumeSize: 30
    ssh:
      allow: true
      publicKeyPath: '~/.ssh/id_rsa.pub'
```

On GCP GKE, you will need to run this command to create a GPU pool:

```shell
# Official doc: https://www.kubeflow.org/docs/gke/customizing-gke/#common-customizations
export GPU_POOL_NAME=<name of the new GPU pool>
gcloud container node-pools create ${GPU_POOL_NAME} \
  --accelerator type=nvidia-tesla-k80,count=1 \
  --zone us-central1-a --cluster ${KF_NAME} \
  --num-nodes=1 --machine-type=n1-standard-4 --min-nodes=0 --max-nodes=5 --enable-autoscaling
```

You will then need to install NVIDIA drivers on all the GPU nodes. The DaemonSet below, hosted in Google’s container-engine-accelerators repository, installs them for you:

```shell
# Official doc: https://www.kubeflow.org/docs/gke/customizing-gke/#common-customizations
kubectl apply -f https://raw.githubusercontent.com/GoogleCloudPlatform/container-engine-accelerators/master/nvidia-driver-installer/cos/daemonset-preloaded.yaml
```

Once you have done this, you will be able to create GPU pools (don’t forget to check your quotas first: with a basic account, you are restricted by default, and you will need to contact their support).
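Once the drivers are in place, a workload still has to ask for a GPU explicitly. As a minimal sketch, the snippet below writes a Pod manifest that requests one GPU through the standard `nvidia.com/gpu` resource limit and runs `nvidia-smi`. The pod name, file name and image are placeholders (assumptions, not taken from the Kubeflow docs), and depending on your provider you may also need the NVIDIA device plugin installed before this resource is advertised by the nodes.

```shell
#!/usr/bin/env bash
# Write a minimal Pod manifest that asks the scheduler for one NVIDIA GPU.
# Pod name, file name and image are placeholders; use an image that ships CUDA tooling.
cat > gpu-check-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-check
spec:
  restartPolicy: Never
  containers:
    - name: gpu-check
      image: my_docker_repository/my-cuda-image
      command: ["nvidia-smi"]
      resources:
        limits:
          nvidia.com/gpu: 1
EOF

echo "Manifest written to gpu-check-pod.yaml"
# Then, against a cluster whose GPU nodes have drivers installed:
#   kubectl apply -f gpu-check-pod.yaml
#   kubectl logs gpu-check
```

If the pod stays in `Pending`, the usual suspects are an empty GPU node pool, missing drivers, or an exhausted quota.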

Okay, but do things get easier from here?

As we say in France, especially in Normandy, yes but no.

Yes, Kubeflow does resolve some of the challenges we’ve mentioned — but some of the biggest challenges are yet to come, and they will take up a lot of your daily routine. Many manual operations will still require you to dig into specific K8s documentation, or guides published by cloud providers.

Below is a summary of Kubeflow vs GPU pool challenges.

| Challenges | Status |
|---|---|
| GPU pool with sharing option | YES but will require manual configuration (declaration in manifest, driver installation, etc.). |
| Collaborative tools | YES definitely. Notebooks are provided via Kubeflow. |
| Infrastructure maintenance | Definitely NO. Now you have a Kubeflow cluster to maintain and operate. |
| Infrastructure diagnosis | YES BUT NO. Activity Dashboard and reporting tools based on Spartakus, logs, etc. But provided to the data engineers, not data scientists themselves. They may come back to you. |
| Infrastructure agility/flexibility | TRICKY. It will depend on your hosting implementation. If it’s on-premise, definitely no. You’ll need to buy hardware components (an NVIDIA V100 costs approximately $10K without chassis, electricity usage, etc.). Some cloud providers can provide “auto-scaling GPU pools” from 0 to n, which is nice. |
| Secured data access | TRICKY. It will depend on how you locate your data, and the technology used. It’s not a ready-to-use solution. |
| Cost control | TRICKY. Again, it will depend on your hosting implementation. It’s not easy, since you need to take care of the infrastructure. Some hidden costs can appear, too (network traffic, monitoring, etc.). |

Forget infrastructure, welcome to GPU platforms made for AI

You can now find various third-party solutions on the market that go one step further. Instead of dealing with the architecture and the Kubernetes cluster, what if you simply focused on your machine learning or deep learning code?

There are well-known solutions such as Paperspace Gradient — or smaller ones, like Run:AI — and we’re pleased to offer another option on the market: AI Training. We’re using this post as a self-promotion opportunity (it’s our blog after all), but the logic remains the same for competitors.

What are the concepts behind it?

No infrastructure to manage

You don’t need to set up and manage a K8s cluster, or a Kubeflow cluster.

You don’t need to declare GPU pools in your manifest.

You don’t need to install NVIDIA drivers on the nodes.

With GPU Platforms like OVHcloud AI Training, your neural network training is as simple as this:

```shell
# Upload data directly to Object Storage
ovhai data upload myBucket@GRA train.zip

# Launch a job with 4 GPUs on a PyTorch environment, with Object Storage bucket directly linked to it
ovhai job run \
  --gpu 4 \
  --volume myBucket@GRA:/data:RW \
  ovhcom/ai-training-pytorch:1.6.0
```

This command will provide you with a JupyterLab Notebook directly plugged into a pool of 4x NVIDIA GPUs, with the PyTorch environment installed. This is all you need to do, and the entire process takes around 15 seconds.

Parallel computing — a great advantage

One of the most significant benefits is that since the infrastructure is not on your premises, you can count on the provider to scale it.

So you can run dozens of jobs simultaneously. A classic use case is to fine-tune all of your models once a week or once a month, with a short bash loop:

```shell
# Start a basic loop over my_models_listing, a bash array of model image names
for model in "${my_models_listing[@]}"
do
  # Launch a job with 3 GPUs for this model's image, with Object Storage bucket directly linked to it
  echo "starting training of $model"
  ovhai job run \
    --gpu 3 \
    --volume myBucket@GRA:/data:RW \
    "my_docker_repository/$model"
done
```

If you have 10 models, it will launch 10x 3 GPUs in a few seconds, and stop them once each job is complete, moving you from sequential to parallel work.

Collaboration out of the box

All of these platforms natively include notebooks, directly plugged into GPU power. With OVHcloud AI Training, we also provide pre-installed environments for TensorFlow, Hugging Face, PyTorch, MXNet and fast.ai, and others will be added to this list soon.

Data set access made easy

I haven’t tested all the GPU platforms on the market, but usually they provide some useful ways to access data. We aim to provide the best work environment for data science teams, so we are also offering an easy way for them to access their data — by enabling them to attach object storage containers during the job launch.

Cost control for users

Third-party GPU platforms quite often provide clear pricing. This is the case for Paperspace, but not for Run:AI (I was unable to find their price list). This is also the case for OVHcloud AI Training.

- GPU power: You pay £1.58/hour/NVIDIA V100s GPU

- Storage: Standard price of OVHcloud Object Storage (compliant with AWS S3 protocol)

- Notebooks: Included

- Observability tools: Logs and metrics included

- Subscription: No, it’s pay-as-you-go, per minute

And there we go — cost and budget estimation is now simple. Try it out for yourself!
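As a worked example of how simple the estimate becomes, here is a small sketch that multiplies GPUs by minutes by the hourly rate. It uses the £1.58 per GPU-hour figure listed above as its default and ignores storage; the function name is made up for the illustration.

```shell
#!/usr/bin/env bash
# Rough pay-as-you-go estimate: GPUs x minutes x hourly rate / 60.
# The default rate is the 1.58 per GPU-hour quoted above; storage is not included.
estimate_gpu_cost() {
  local gpus=$1 minutes=$2 rate=${3:-1.58}
  LC_ALL=C awk -v g="$gpus" -v m="$minutes" -v r="$rate" \
    'BEGIN { printf "%.2f\n", g * m * r / 60 }'
}

# "4 GPUs for 2 days" (2 days = 2880 minutes)
estimate_gpu_cost 4 2880    # prints 303.36
# 10 models fine-tuned in parallel on 3 GPUs each, for one hour
estimate_gpu_cost 30 60     # prints 47.40
```

Because billing is per minute, a job that finishes early costs proportionally less, which is what makes this kind of back-of-the-envelope budget reliable.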

Mission complete?

Below is a summary addressing the major challenges to resolve when dealing with GPU pool sharing. It’s a big yes!

| Challenges | Status |
|---|---|
| GPU pool with sharing option | YES definitely. In fact, even many GPU pools in parallel, if you want to. |
| Collaborative tools | YES definitely. Notebooks are always provided, as far as I know. |
| Infrastructure maintenance | YES definitely. Infrastructure is managed by the provider. You will need to connect via SSH to debug. |
| Infrastructure diagnosis | YES. Logs and metrics provided on our side, at least. |
| Infrastructure agility/flexibility | YES definitely. Scale up or down one or more GPU pools, use them for 10 minutes or a full month, etc. |
| Secured data access | Depends on the solution you choose, but usually it’s a YES via simplified object storage access. |
| Cost control | Depends on the solution you choose, but usually it’s a YES with packaged prices and zero investments to make (zero CAPEX). |

Conclusion

If we go back to the main challenges faced by a company that requires shared GPU pools, we can say without a doubt that Kubernetes is a market-standard for AI pipeline orchestration.

An on-premise K8s cluster with Kubeflow is really interesting if the data cannot be processed in the cloud (e.g. banking, hospitals, any kind of sensitive data) or if your team has flat (and lower-level) GPU requirements. You can invest in a few GPUs and manage the fleet yourself with software on top. But if you need more power, very soon the cloud will become the only viable option. Hardware investments, hardware obsolescence, electricity usage and scaling will give you some headaches.

Then, depending on the situation, Kubeflow in the cloud might be really useful. It delivers powerful pipeline features, notebooks, and enables users to manage virtual GPU pools.

But if you want to avoid infrastructure tasks, control your spending, and focus on your added value and code, you might consider GPU platforms as your first choice.

However, there is no such thing as magic — and without knowing exactly what you want, even the best platform won’t be able to meet your needs. Yet some start-ups, not listed here, can offer a combination of platforms and expertise to help you in your project, infrastructures and use cases.

Thank you for reading, and don’t forget that we also offer inference at scale with ML Serving. This is the next logical step after training.

Want to find out more?

- Solution page: https://www.ovhcloud.com/en-gb/public-cloud/ai-training/

- Public documentation: https://docs.ovh.com/gb/en/ai-training/

- Community: community.ovh.com/en/

Product Manager for databases / big data / AI