The need to share data across different workloads is a constant in IT, but the preferred way of sharing it has evolved over the last few years. Ten years ago, most developers would have used network file systems (NFS, Samba…); as Object Storage gained features and abstractions, it has naturally become the default choice.

In parallel with this evolution, more and more use cases involving publicly shared data have arisen. The amount of data has exploded in almost every application, and IT teams no longer want to manage storage themselves (maintenance, failures, scaling…).

Today, all the major languages have object storage libraries (at least for the S3 API), and tons of applications ship with connectors to those services by default. Like many IT people, I have switched my reflexes to think Object Storage first, and to treat the cases where a network file system is a better fit as exceptions.

When applications need storage performance…

For many years, performance was not the main focus. The goal was to take advantage of unlimited space and public access to store images, documents, code…

Nowadays, some applications need more speed because objects are bigger and more numerous. That is the case for Big Data and AI scenarios, but also for the majority of modern applications.

- Those who work on big data analysis know that, in many cases, you can wait hours before getting a result. Analyzing user behavior, system deviations or cost optimization requires powerful compute resources, but also high-performance storage. Big Data clusters need to access the data quickly to deliver results in time.

- Training AI models and fine-tuning their predictions also requires a lot of data analysis, which takes hours and slows down your progress if your storage solution is the bottleneck.

- Serving ever larger media (4K video, HD pictures from smartphones…) over high-speed networks (fiber, 5G) challenges the previous solutions.

OVHcloud Standard Object Storage hosts petabytes of data across the world on a strong and resilient infrastructure, but our classic offer was not designed to address those use cases: its performance was not sufficient in those situations.

A new Object Storage service for demanding applications

So what does high performance mean for an object storage service? What are we talking about?

There are mainly two things to consider when you need speed on your objects. The first one is the API. It is the first component to answer the requests you send to the cluster, so even the simplest API call should respond in a flash. That is the assurance that the engine and all the automation tools on the cluster are optimized and do not consume precious milliseconds. The second one is bandwidth. Once your object is identified, you expect it to be delivered as fast as possible, using the full capacity of the disks and the network.
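To make these two components concrete, here is a minimal Python sketch of a simplified model, assuming a download costs one Time-to-First-Byte (TTFB) wait followed by streaming at a constant throughput. It ignores TCP slow start, retries and parallel transfers; the helper names and the input figures are hypothetical and are not OVHcloud measurements.

```python
"""Simplified model: total download time = TTFB + size / sustained throughput."""


def estimated_duration_s(size_bytes: int, ttfb_s: float, throughput_bytes_per_s: float) -> float:
    """Estimated seconds to fetch an object of size_bytes (hypothetical helper)."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    # Wait for the first byte (API cost), then stream the body (bandwidth cost).
    return ttfb_s + size_bytes / throughput_bytes_per_s


def ttfb_share(size_bytes: int, ttfb_s: float, throughput_bytes_per_s: float) -> float:
    """Fraction of the total time spent waiting for the first byte."""
    return ttfb_s / estimated_duration_s(size_bytes, ttfb_s, throughput_bytes_per_s)


if __name__ == "__main__":
    # Hypothetical inputs for illustration: 20 ms TTFB, 500 MB/s sustained throughput.
    ttfb, throughput = 0.020, 500e6
    for size in (1, 10_000, 1_000_000, 100_000_000):
        total_ms = estimated_duration_s(size, ttfb, throughput) * 1000
        print(f"{size:>11,} B  {total_ms:8.2f} ms  TTFB share {ttfb_share(size, ttfb, throughput):.0%}")
```

With these hypothetical inputs, waiting for the first byte accounts for almost all of the time on a 1-byte object but only about 9% on a 100 MB object, which is why small and large objects stress different parts of the service.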

So we are excited to launch OVHcloud High Performance Object Storage, which fits those requirements, in the coming weeks. General availability is planned for February 21st in Strasbourg first, then mid-March in Gravelines.

Some numbers

We’ll run some tests to compare this new service with the existing OVHcloud service and with the market leader in Object Storage, AWS S3. The tests are run from the OVHcloud network, on a Public Cloud B2-120 instance with a 10 Gbit/s public interface, located in Gravelines (northern France). The three tested services are located in central Europe: Strasbourg (France) for the OVHcloud ones and Frankfurt (Germany, close to Strasbourg) for AWS, so the instance is at almost the same distance from each cluster.

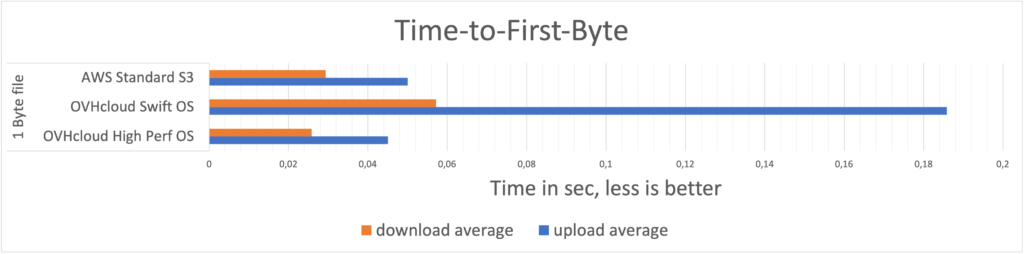

Time-to-First-Byte test

So how efficient is the API of this new service? Here we compare the Time-to-First-Byte (TTFB), which gives us an idea of how quickly the cluster processes the request, identifies the object, checks the access rights and starts returning it. To do so, we send tons of 1-byte objects and measure the time needed to access them.
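A minimal sketch of such a measurement loop in Python, assuming a caller-supplied function that issues one GET and returns as soon as the first byte arrives. The helper name `measure_ttfb`, the run count and the nearest-rank 95th percentile are illustrative choices; the article does not describe its own tooling.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_ttfb(fetch_first_byte: Callable[[], bytes], runs: int = 100) -> Dict[str, float]:
    """Time `runs` calls of fetch_first_byte and summarise latencies in milliseconds.

    fetch_first_byte is a hypothetical callable that sends one GET request and
    returns the first byte(s) of the response body.
    """
    if runs < 1:
        raise ValueError("runs must be >= 1")
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        data = fetch_first_byte()
        elapsed = time.perf_counter() - start
        if not data:
            raise RuntimeError("no byte received")
        samples_ms.append(elapsed * 1000)
    samples_ms.sort()
    # Nearest-rank 95th percentile on the sorted samples.
    p95_index = math.ceil(95 * len(samples_ms) / 100) - 1
    return {
        "runs": runs,
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }
```

With boto3, for example, the callable could wrap `client.get_object(Bucket=..., Key=...)["Body"].read()` on a 1-byte object, with the client created by `boto3.client("s3", endpoint_url=...)`; the bucket, key and endpoint are placeholders. Reusing one client typically keeps connections warm, so after the first call the samples mostly exclude TCP and TLS handshakes.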

To be fair in this test, we need to take into account that we leave the OVHcloud network to reach the AWS cluster. Here are the ping results we got:

OVHcloud instance to OVHcloud Object Storage

$ ping s3.sbg.perf.cloud.ovh.net

PING s3.sbg.perf.cloud.ovh.net (141.95.161.68) 56(84) bytes of data.

64 bytes from 141.95.161.68 (141.95.161.68): icmp_seq=1 ttl=53 time=10.2 ms

OVHcloud instance to AWS Object Storage

$ ping s3.eu-central-1.amazonaws.com

PING s3.eu-central-1.amazonaws.com (52.219.169.77) 56(84) bytes of data.

64 bytes from s3.eu-central-1.amazonaws.com (52.219.169.77): icmp_seq=1 ttl=45 time=11.0 ms

So we have almost the same latency. Let’s move on to the Time-to-First-Byte for each service.

OVHcloud High Perf looks slightly better: the gap is small, but the advantage is on the OVHcloud side.

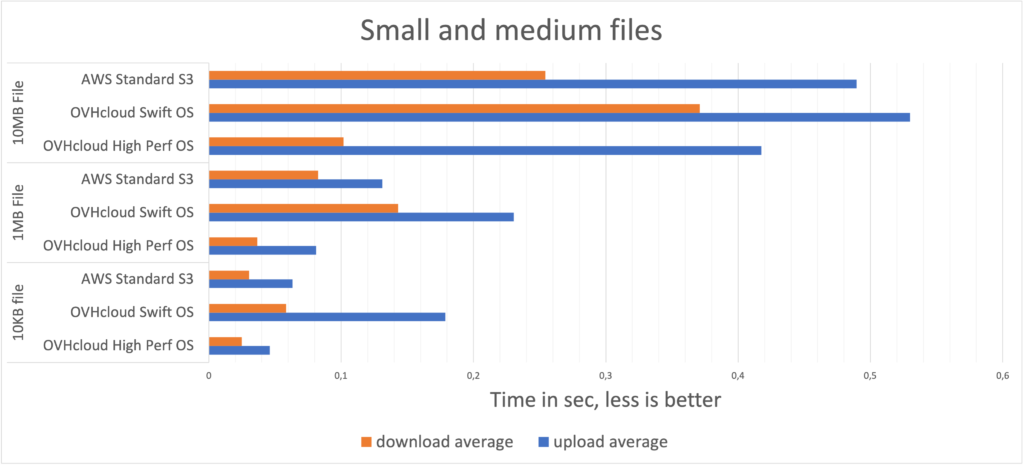
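The pings above differ by about 0.8 ms (10.2 ms to OVHcloud, 11.0 ms to AWS). One rough way to compare TTFB on an equal footing is to subtract one network round trip from each measured TTFB. The Python sketch below extracts the RTT from a ping reply line and applies that correction; it assumes a reused (warm) connection, so the request costs a single round trip, and the 25 ms TTFB in the example is hypothetical.

```python
import re

# Matches the round-trip time printed by Linux ping, e.g. "time=10.2 ms".
PING_TIME = re.compile(r"time=([0-9.]+) ms")


def rtt_ms(ping_line: str) -> float:
    """Extract the round-trip time in milliseconds from one ping reply line."""
    match = PING_TIME.search(ping_line)
    if match is None:
        raise ValueError(f"no RTT found in {ping_line!r}")
    return float(match.group(1))


def server_side_ms(ttfb_ms: float, round_trip_ms: float) -> float:
    """Rough server-side time: TTFB minus one round trip (warm connection assumed)."""
    return max(0.0, ttfb_ms - round_trip_ms)


if __name__ == "__main__":
    ovh = rtt_ms("64 bytes from 141.95.161.68 (141.95.161.68): icmp_seq=1 ttl=53 time=10.2 ms")
    aws = rtt_ms("64 bytes from s3.eu-central-1.amazonaws.com (52.219.169.77): icmp_seq=1 ttl=45 time=11.0 ms")
    print(f"RTT gap: {aws - ovh:.1f} ms")
    # Hypothetical TTFB of 25 ms, for illustration only.
    print(f"Server-side estimate: {server_side_ms(25.0, ovh):.1f} ms")
```

A single ICMP reply is a noisy sample; averaging several pings, and several TTFB runs, gives a steadier correction.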

Small and medium files test

Now we test not only the API but normal object usage, with files from 10 KB to 10 MB. This size range covers plenty of use cases, especially serving static content on the web: code, images, sound…

As you can see, we leave the territory where OVHcloud’s advantage came from latency alone and enter real-world performance, which exercises the whole chain, including writes to and reads from disk. Here, OVHcloud High Perf clearly takes the lead on 1 MB files, with downloads around 55% faster than AWS S3.

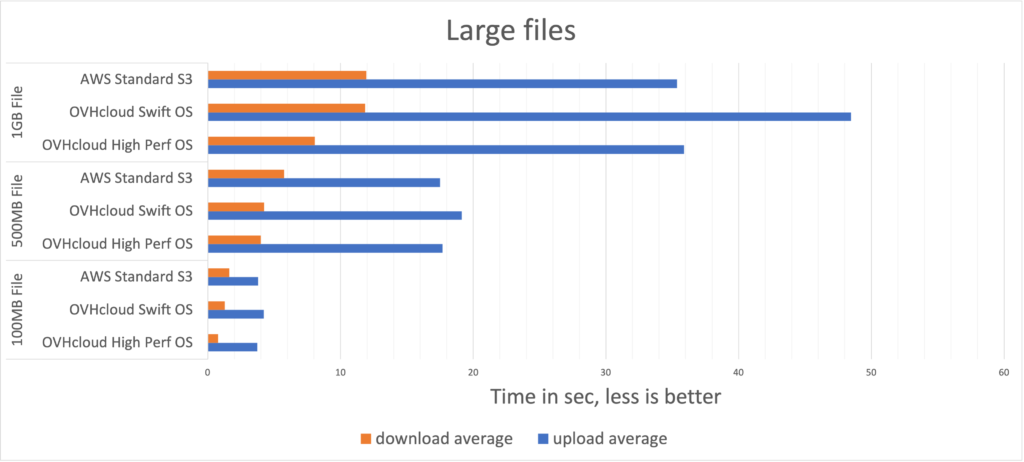
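The article does not say how "55% faster" is computed. As a hedged illustration in Python, the sketch below shows the two usual readings, using hypothetical timings for one 1 MB download (MB taken as 10^6 bytes): a 55% higher throughput corresponds to roughly 35% less transfer time, so the definition a benchmark uses matters.

```python
def throughput_mb_s(size_bytes: int, duration_s: float) -> float:
    """Throughput in MB/s (1 MB = 10**6 bytes)."""
    return size_bytes / duration_s / 1e6


def percent_faster(throughput_a: float, throughput_b: float) -> float:
    """How much higher A's throughput is than B's, in percent."""
    return (throughput_a / throughput_b - 1) * 100


def percent_less_time(duration_a: float, duration_b: float) -> float:
    """How much shorter A's transfer time is than B's, in percent."""
    return (1 - duration_a / duration_b) * 100


if __name__ == "__main__":
    # Hypothetical timings for one 1 MB download, not measured values.
    size, time_a, time_b = 1_000_000, 0.0100, 0.0155
    a, b = throughput_mb_s(size, time_a), throughput_mb_s(size, time_b)
    print(f"A: {a:.1f} MB/s, B: {b:.1f} MB/s")
    print(f"{percent_faster(a, b):.0f}% higher throughput")
    print(f"{percent_less_time(time_a, time_b):.1f}% less time")
```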

Large files test

When we move to files of 100 MB and more, we enter a different space, where bandwidth is the main factor in the overall result. Here the data could of course be videos, but also application-specific files or large datasets.

Here, upload performance is almost the same for AWS and OVHcloud, but there is still a real difference on the download test, with an advantage of around 30% for OVHcloud High Perf.
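For large objects, the test instance’s network link sets a hard floor. A quick Python sketch, assuming the B2-120’s 10 Gbit/s public interface is the only bottleneck and ignoring TCP, TLS and HTTP overhead: 100 MB (10^8 bytes) is 8 × 10^8 bits, so it cannot arrive in less than 0.08 s, whatever the storage backend. The helper names and the 0.16 s timing are hypothetical.

```python
def line_rate_floor_s(size_bytes: int, link_gbit_s: float = 10.0) -> float:
    """Minimum seconds to move size_bytes over the link, ignoring protocol overhead."""
    return size_bytes * 8 / (link_gbit_s * 1e9)


def link_utilization(size_bytes: int, duration_s: float, link_gbit_s: float = 10.0) -> float:
    """Share of the link capacity achieved by a transfer that took duration_s."""
    return line_rate_floor_s(size_bytes, link_gbit_s) / duration_s


if __name__ == "__main__":
    for size in (100_000_000, 1_000_000_000):
        print(f"{size / 1e6:,.0f} MB: at least {line_rate_floor_s(size):.2f} s on a 10 Gbit/s link")
    # Hypothetical: a 100 MB download measured at 0.16 s would use about half the link.
    print(f"utilization: {link_utilization(100_000_000, 0.16):.0%}")
```

When measured download times approach this floor, the client’s link, not the storage service, becomes the bottleneck.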

Some takeaways about this new offer

In terms of raw performance, OVHcloud High Perf Object Storage is a serious challenger to AWS S3. The API is at least as fast as our competitor’s. We notice a real advantage for OVHcloud on medium files (1 MB to 100 MB): on 1 MB files, we are over 50% faster than AWS on download and around 40% faster on upload.

Jean-Daniel used to be a technical guy, working as a system engineer on Linux and OpenStack. A few years ago, he changed hats to develop marketing skills, putting his technical background to work for communication.