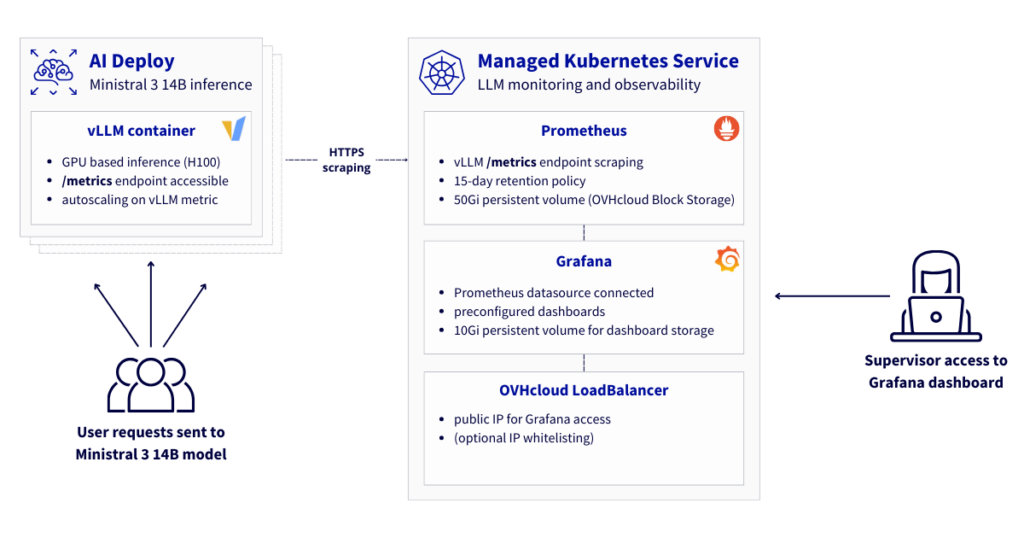

Reference Architecture: Custom metric autoscaling for LLM inference with vLLM on OVHcloud AI Deploy and observability using MKS

Take your LLM (Large Language Model) deployment to production level with comprehensive custom autoscaling configuration and advanced vLLM metrics observability. This reference architecture describes a comprehensive solution for deploying, autoscaling and monitoring vLLM-based LLM workloads on OVHcloud infrastructure. It combinesAI Deploy, used for model serving with custom metric autoscaling, and Managed Kubernetes Service (MKS), which […]