My data is an asset. Let’s share best practices to protect your data.

If you feel that security is a constraint, it’s time to think again! In this blog post, I will share 5 simple rules that you can easily implement to secure your backups without headaches, thanks to the “Object Storage Standard-S3 API” storage class.

I am a DevOps or DevSecOps engineer. I build on my platform and want to stay focused on my business, where I add value. That is why I manage the deployment and scaling of my infrastructure as code, and delegate the management of that infrastructure to my cloud provider.

As my business develops, the volume of my data grows exponentially, so my data has value too!

As my business grows, I collect more data and keep a history of it year after year. I am even deploying in new locations around the world!

All this data (applications, user data, logs, media, analytics, reporting) is stored and backed up in object storage for flexibility, metadata search, and easy scaling. My data is a major asset: it drives my business, and I want to protect it.

Data is an important asset that requires good governance!

Please don’t think, “Cool, I have copied my data to a secondary bucket, my backup is complete, I am safe.” Nope, that is not enough!

What S3 Object Storage doesn’t protect you from is yourself. Let’s take a look together at the 3 types of risks we need to protect ourselves from.

(1) The number one cause of data loss is human error: accidental deletion, or overwriting an object with garbage data. This is a scenario you want to avoid.

(2) The second category is unpredictable events: software issues, hardware issues (drive failure), datacenter downtime, or natural and man-made disasters.

(3) The third category is what keeps security experts up at night: malicious actions such as malware, ransomware and viruses, acts of sabotage, DDoS…

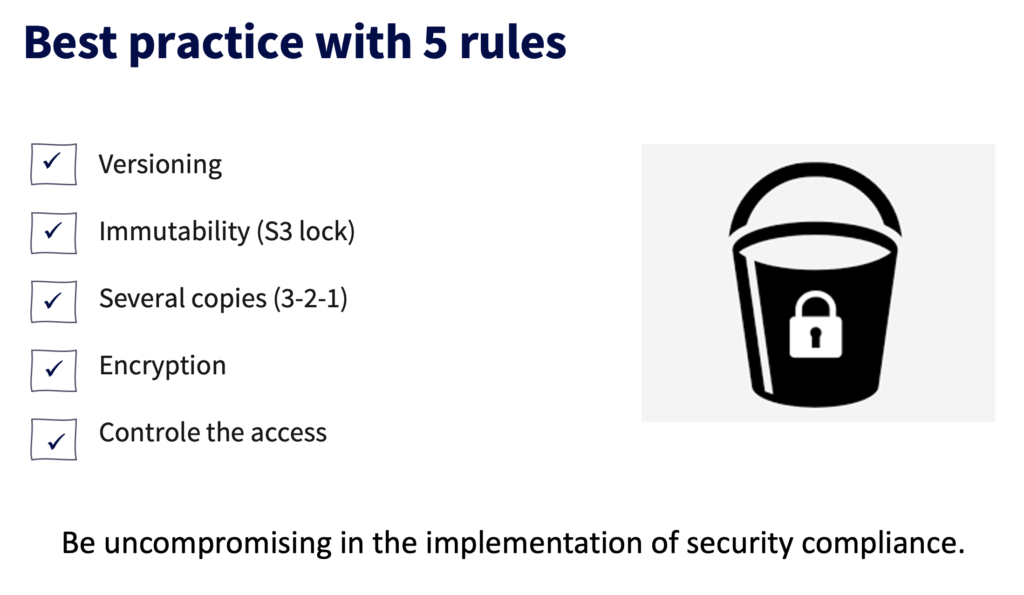

Security is important and non-negotiable, so let’s look at 5 easy rules to protect against these risks…

…and keep working with peace of mind!

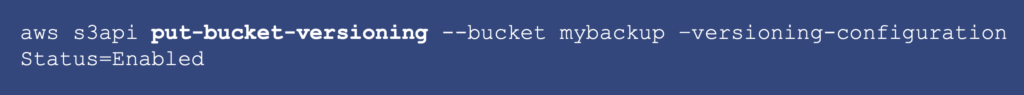

Rule n° 1 – versioning

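Versioning protects against accidental overwrites: instead of replacing an object, each write creates a new version and the previous versions are kept. After an accidental deletion you can restore the previous version, and in the event of data corruption you can retrieve a specific earlier version.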
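To make this concrete, here is a minimal Python sketch, assuming a boto3-style S3 client pointed at your provider’s S3 endpoint. The helper names `enable_versioning`, `previous_version_id` and `restore_previous_version` are hypothetical; the calls they make (`put_bucket_versioning`, `list_object_versions`, `copy_object`) are standard S3 operations as exposed by boto3. Restoring works by copying an older version back on top of the key, which creates a new latest version and leaves the history intact.

```python
from typing import Any, Optional


def enable_versioning(s3: Any, bucket: str) -> None:
    """Enable versioning: overwrites and deletes keep the previous versions."""
    s3.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def previous_version_id(s3: Any, bucket: str, key: str) -> Optional[str]:
    """Return the newest non-current version of `key`, or None.

    Sketch only: reads the first page of results and ignores pagination.
    After a delete, the latest entry is a delete marker, so every real
    version is non-current and the newest one is the last good copy.
    After an overwrite, the latest version is the bad one and the newest
    non-current version is the previous good copy.
    """
    resp = s3.list_object_versions(Bucket=bucket, Prefix=key)
    older = [
        v for v in resp.get("Versions", [])
        if v["Key"] == key and not v["IsLatest"]
    ]
    if not older:
        return None
    older.sort(key=lambda v: v["LastModified"], reverse=True)
    return older[0]["VersionId"]


def restore_previous_version(s3: Any, bucket: str, key: str) -> Optional[str]:
    """Copy the previous version back on top of `key` (history is preserved)."""
    version_id = previous_version_id(s3, bucket, key)
    if version_id is not None:
        s3.copy_object(
            Bucket=bucket,
            Key=key,
            CopySource={"Bucket": bucket, "Key": key, "VersionId": version_id},
        )
    return version_id
```

In practice, `s3` would be a real client such as `boto3.client("s3", endpoint_url=...)` with the endpoint of your region; passing the client in as a parameter also makes the helpers easy to test without network access.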

Rule n° 2 – immutability

While your primary storage systems must stay open and available, your backup data should be isolated and immutable.

Implement the Write Once, Read Many (WORM) model using the S3 Object Lock API.

You can define different parameters according to your needs, business, and type of data:

- retention periods

- legal holds

- governance mode

- compliance mode

This rule helps your organization meet its compliance obligations. If you must keep logs for a legally required period, compliance mode lets you set exactly the duration you need. This is handy because logs are generated every second, so they can be difficult to keep track of. With compliance mode, you don’t need to worry about this anymore: set a retention period of 1, 3, or 5 years, and the logs remain protected against overwriting and deletion throughout the designated period.
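As a minimal sketch, assuming the same boto3-style S3 client, here is how a default retention rule could be applied to a bucket, plus a legal hold on a single object. The helper names are hypothetical; `put_object_lock_configuration` and `put_object_legal_hold` are the boto3 names of the S3 Object Lock operations. Object Lock relies on versioning, and some providers only let you enable it when the bucket is created, so check the Object Lock guide for the exact procedure.

```python
from typing import Any, Dict

# COMPLIANCE: nobody can delete a locked version or shorten its retention.
# GOVERNANCE: users with a specific bypass permission can still do so.
VALID_MODES = {"GOVERNANCE", "COMPLIANCE"}


def default_retention_config(mode: str, years: int) -> Dict[str, Any]:
    """Build an ObjectLockConfiguration with a default retention rule."""
    mode = mode.upper()
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    if years <= 0:
        raise ValueError("retention period must be a positive number of years")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Years": years}},
    }


def lock_bucket(s3: Any, bucket: str, mode: str = "COMPLIANCE",
                years: int = 5) -> Dict[str, Any]:
    """Apply the default retention: new object versions are WORM for `years`."""
    config = default_retention_config(mode, years)
    s3.put_object_lock_configuration(Bucket=bucket, ObjectLockConfiguration=config)
    return config


def set_legal_hold(s3: Any, bucket: str, key: str, on: bool = True) -> None:
    """A legal hold blocks deletion until it is removed, with no expiry date."""
    s3.put_object_legal_hold(
        Bucket=bucket, Key=key, LegalHold={"Status": "ON" if on else "OFF"}
    )
```

For the log example above, `lock_bucket(s3, "logs", "COMPLIANCE", 3)` would keep every new log object immutable for 3 years (the bucket name is illustrative).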

read more: https://docs.ovh.com/ie/en/storage/object-storage/s3/managing-object-lock/

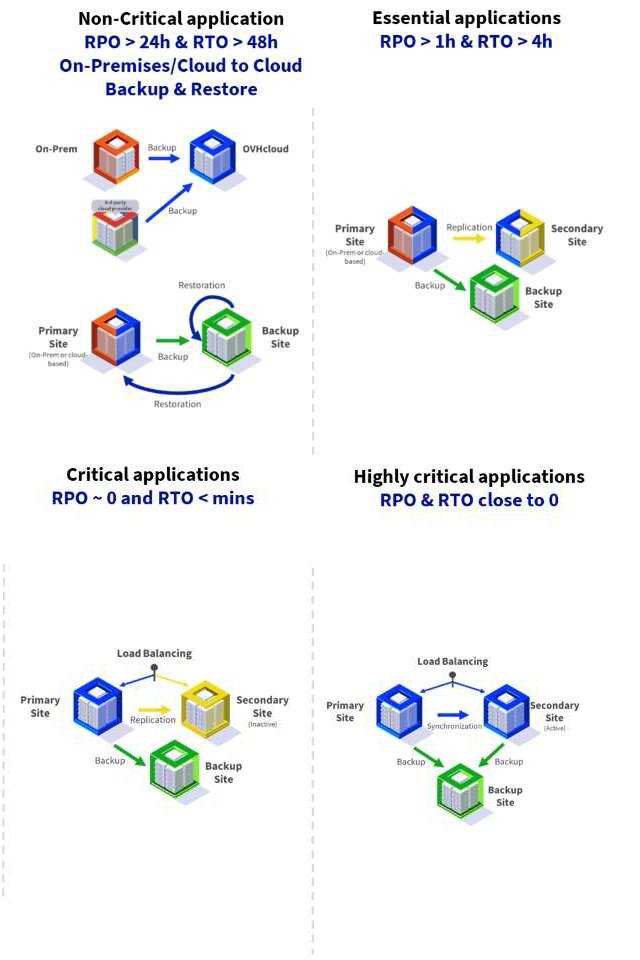

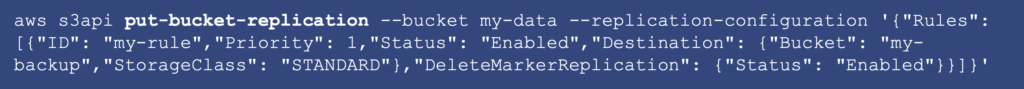

Rule n° 3 – data replication off-site

To protect yourself against hardware failures or geographical events, follow the well-known 3-2-1 rule: keep 3 copies of your data, on 2 different types of media, with 1 copy off-site.
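The 3-2-1 rule (3 copies, 2 types of media, 1 copy off-site) is easy to check mechanically. This sketch assumes a hypothetical inventory in which each copy is described by its media type and whether it is stored off-site; the `Copy` type and the `check_321` function are illustrative names, not part of any API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Copy:
    name: str      # e.g. "production bucket", "replica in another region"
    media: str     # e.g. "object-storage", "block-storage", "tape"
    offsite: bool  # stored in a different location than production


def check_321(copies: List[Copy]) -> List[str]:
    """Return the list of 3-2-1 violations (an empty list means compliant)."""
    problems = []
    if len(copies) < 3:
        problems.append(f"only {len(copies)} copies, need at least 3")
    media_types = {c.media for c in copies}
    if len(media_types) < 2:
        problems.append(f"only {len(media_types)} media type(s), need at least 2")
    if not any(c.offsite for c in copies):
        problems.append("no off-site copy")
    return problems
```

A production bucket plus a single replica in another region passes the off-site check but still fails on the number of copies and media types.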

Before setting up your data copies, you need to evaluate the RPO and RTO you are targeting:

- RPO (recovery point objective) = in case of a geographical failure, the maximum amount of data, measured in time, that you can accept to lose; in other words, how old the most recent copy you restore from is allowed to be

- RTO (recovery time objective) = in case of a geographical failure, the maximum acceptable time to recover your data
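A quick back-of-the-envelope check helps compare a backup plan against RPO and RTO targets. This sketch assumes that the worst-case data loss equals the interval between two backups (the failure happens just before the next backup runs) and that restore time is dominated by transfer time, i.e. data size divided by sustained restore throughput. The function names and figures are illustrative.

```python
def worst_case_rpo_hours(backup_interval_hours: float) -> float:
    """Worst case: a full interval of changes is lost."""
    return backup_interval_hours


def estimated_rto_hours(data_gb: float, throughput_mb_per_s: float) -> float:
    """Transfer-bound restore time: size / throughput (1 GB = 1000 MB here)."""
    seconds = data_gb * 1000 / throughput_mb_per_s
    return seconds / 3600


def meets_targets(backup_interval_hours: float, data_gb: float,
                  throughput_mb_per_s: float,
                  rpo_target_hours: float, rto_target_hours: float) -> bool:
    """True if both the worst-case RPO and the estimated RTO fit the targets."""
    return (worst_case_rpo_hours(backup_interval_hours) <= rpo_target_hours
            and estimated_rto_hours(data_gb, throughput_mb_per_s) <= rto_target_hours)
```

For example, restoring 1,000 GB at a sustained 100 MB/s takes 10,000 s, roughly 2.8 hours: a daily backup of that data meets a 24-hour RPO and a 4-hour RTO, but not a 1-hour RTO.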

Of course, everybody wants a 0-second recovery, but is it necessary?

Such a recovery plan requires costly resources to maintain. A good approach is to sort your data by criticality, fine-tune the plan for each category, and put backup retention policies in place:

| Data type | Backup policy |
|---|---|
| Non-business-critical | Weekly |
| Business-critical | Daily for 1 month, then monthly for 1 year |
| Archive | Kept for more than 1 year |
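The business-critical policy from the table (daily backups for 1 month, then monthly for 1 year) can be expressed as a pruning rule. This minimal sketch assumes backups are identified by their date, approximates 1 month as 30 days and 1 year as 365 days, and keeps the earliest backup of each calendar month as the monthly copy; `backups_to_keep` is a hypothetical helper, not an OVHcloud or rclone feature.

```python
from datetime import date
from typing import Dict, Iterable, Set, Tuple


def backups_to_keep(backup_dates: Iterable[date], today: date,
                    daily_days: int = 30, window_days: int = 365) -> Set[date]:
    """Keep every backup from the last `daily_days` days, plus the earliest
    backup of each calendar month within the last `window_days` days."""
    keep: Set[date] = set()
    first_of_month: Dict[Tuple[int, int], date] = {}
    for d in sorted(set(backup_dates)):
        age = (today - d).days
        if age < 0 or age > window_days:
            continue  # future-dated, or outside the 1-year window
        if age <= daily_days:
            keep.add(d)  # daily tier
        # Dates are sorted, so the first one seen per month is the earliest
        first_of_month.setdefault((d.year, d.month), d)
    keep.update(first_of_month.values())  # monthly tier
    return keep
```

Backups that are not in the returned set are candidates for deletion, or for moving to archive storage if they fall under the archive policy.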

read more: https://docs.ovh.com/ie/en/storage/object-storage/s3/rclone/

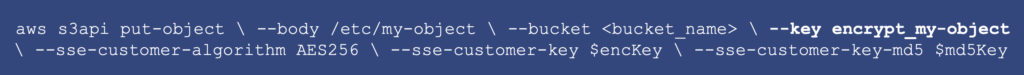

Rule n° 4 – encryption

Encrypt your data at rest with your own keys. Our server-side encryption with customer-provided keys (SSE-C) is based on the AES-256 algorithm.

Note that the data in transit is encrypted thanks to the TLS protocol.
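With SSE-C, the client supplies the key with every request, and the same key is needed to read the object back. This sketch uses only the Python standard library to generate a random 256-bit key and build the three SSE-C request headers defined by the S3 API: the algorithm, the base64-encoded key, and the base64-encoded MD5 digest of the key. The function names are illustrative; with boto3 you would instead pass `SSECustomerAlgorithm="AES256"` and `SSECustomerKey=key` to `put_object` or `get_object`, and boto3 computes the MD5 header for you.

```python
import base64
import hashlib
import os
from typing import Dict


def new_sse_c_key() -> bytes:
    """Generate a random 256-bit (32-byte) key for AES-256."""
    return os.urandom(32)


def sse_c_headers(key: bytes) -> Dict[str, str]:
    """Build the SSE-C request headers for a customer-provided AES-256 key."""
    if len(key) != 32:
        raise ValueError("SSE-C with AES-256 needs a 32-byte key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key":
            base64.b64encode(key).decode("ascii"),
        # The MD5 digest lets the server check that the key arrived intact
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode("ascii"),
    }
```

Store the key in a secrets manager rather than next to the data: losing it means losing access to the objects encrypted with it.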

read more: https://docs.ovh.com/ie/en/storage/object-storage/s3/encrypt-your-objects-with-sse-c/

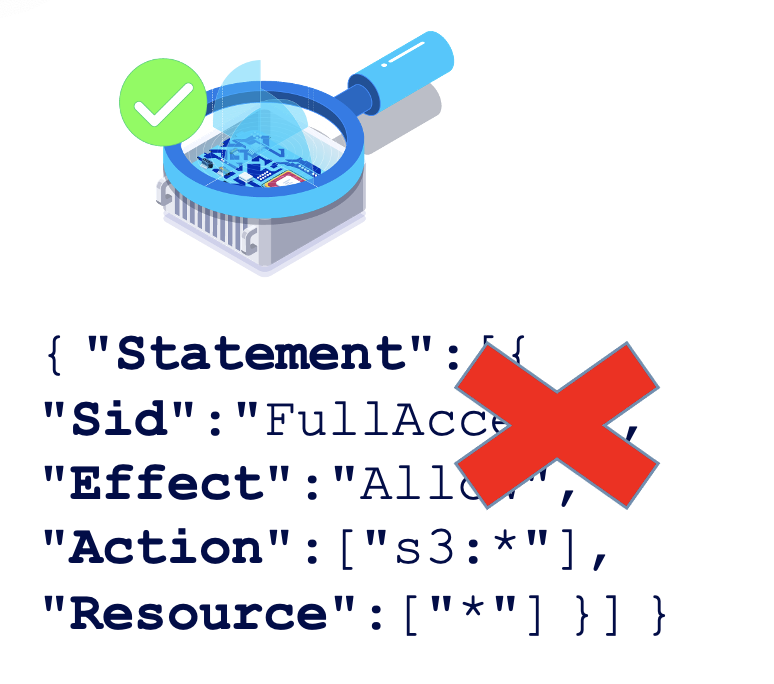

Rule n° 5 – user policies

Grant only the permissions required to perform a task, using:

- User policy

- Bucket policy (coming soon)

- Bucket ACL

Extract your S3 policies every month and review them: it doesn’t take much time and can be automated. Check that you know every user and that each user’s rights match their profile. Never let a wildcard (*) grant everyone access to a sensitive bucket or object.
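The monthly review can be partly automated. As a minimal sketch, assuming you have exported your policies as JSON documents in the usual IAM/S3 policy format (`Statement` entries with `Effect`, `Action`, `Resource` and, for bucket policies, `Principal`), the function below flags `Allow` statements that use the broadest wildcards. `find_wildcards` is a hypothetical helper, and the AWS-style ARN format it checks is an assumption.

```python
import json
from typing import Any, List


def _as_list(value: Any) -> List[Any]:
    """Policy fields may be a single value or a list of values."""
    return value if isinstance(value, list) else [value]


def find_wildcards(policy_json: str) -> List[str]:
    """Return human-readable warnings for over-permissive Allow statements."""
    policy = json.loads(policy_json)
    warnings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action in ("*", "s3:*"):
                warnings.append(f"statement {i}: action '{action}' grants every operation")
        for resource in _as_list(stmt.get("Resource", [])):
            if resource in ("*", "arn:aws:s3:::*"):
                warnings.append(f"statement {i}: resource '{resource}' covers every bucket")
        principal = stmt.get("Principal")
        if principal == "*" or (
            isinstance(principal, dict) and "*" in _as_list(principal.get("AWS", []))
        ):
            warnings.append(f"statement {i}: principal '*' means anyone")
    return warnings
```

The check deliberately ignores narrower patterns such as `arn:aws:s3:::backups/*` (all objects of one bucket), which are normal in a least-privilege policy; treat it as a first filter before a human review, not as a complete audit.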

read more: https://docs.ovh.com/ie/en/storage/object-storage/s3/identity-and-access-management/

Be uncompromising in the implementation of security compliance.

If you are comfortable with these 5 rules, you can rest assured. As with all security rules, regular check-ups and training are always useful!

Bonus rule – Traceability

When you are audited, or when you want to audit the architecture you run at your cloud provider, it is important to have all the information you need.

The S3 logging feature provides the traceability you need to know who accessed your data, when, and which operations they performed.

Using our API, you can set up triggers that alert you in case of malicious or simply abnormal behavior.
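As an illustration of such a trigger, here is a minimal sketch that counts delete operations per requester in a batch of access-log records and flags anyone above a threshold. It assumes the logs have already been parsed into dictionaries with hypothetical `requester` and `operation` fields, and that delete operations are named like the `REST.DELETE.OBJECT` operation of S3 server access logs; adapt the field names, operation names, and threshold to your own log format.

```python
from collections import Counter
from typing import Dict, Iterable, List


def suspicious_deleters(records: Iterable[Dict[str, str]],
                        max_deletes: int = 100) -> List[str]:
    """Return requesters whose delete count in this batch exceeds `max_deletes`."""
    deletes = Counter(
        r["requester"] for r in records
        if r.get("operation", "").startswith("REST.DELETE.")
    )
    return sorted(who for who, n in deletes.items() if n > max_deletes)
```

Running this on each new batch of logs and sending the result to your alerting channel gives an early warning of mass deletions, such as a compromised key or a ransomware script wiping a bucket.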

Want to know more about data protection? More blog posts are coming soon!

Meanwhile, feel free to consult our guides that will assist you in your data security implementation.

And discover OVHcloud Object Storage services with S3 API https://www.ovhcloud.com/en-ie/public-cloud/object-storage/