Kubernetes 1.33 was released a few weeks ago.

As this new release contains 64 enhancements (!), it is not easy to know which features are interesting and useful, and how to use them.

In this blog post, let’s discover one of these interesting and useful new features: “Topology aware routing in multi-zone Kubernetes clusters”.

⚠️ Kubernetes 1.33 should be available on OVHcloud MKS clusters at the end of June/beginning of July, but the demo also works on MKS with Kubernetes 1.32 😉.

Topology aware routing

Since Kubernetes 1.33, the topology aware routing and traffic distribution features are Generally Available (GA).

They let you optimize Service traffic in multi-zone clusters, reducing latency and cross-zone data transfer costs.

Topology Aware Routing provides a mechanism to help keep traffic within the zone it originated from.

In multi-zone clusters, this can improve reliability and network performance while reducing costs.

As OVHcloud has just launched, in Beta, its Managed Kubernetes Service (MKS) clusters across 3 Availability Zones (AZ), it’s the perfect occasion for me to test this brand new Kubernetes feature 🙂.

Demo

Prerequisite: Have a Kubernetes cluster with at least 2 nodes running in 2 different zones.

If you don’t already have one, you can follow this blog post to create an OVHcloud MKS cluster with 3 node pools, one per AZ.

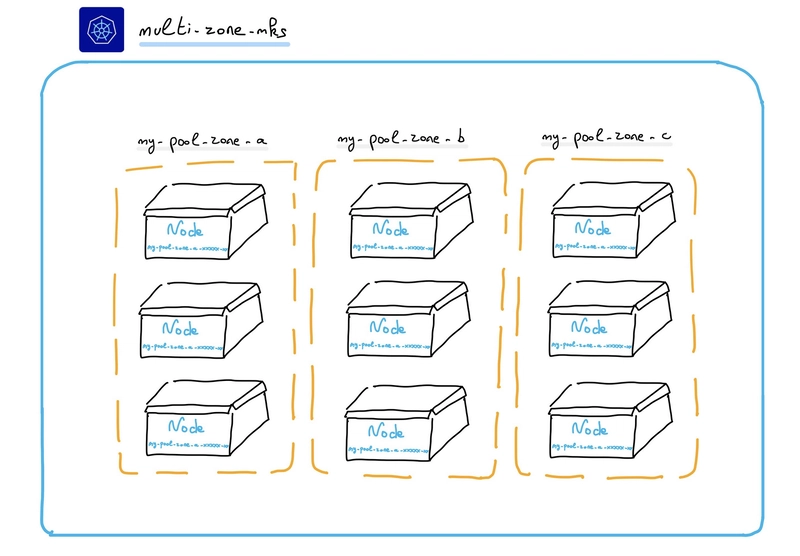
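To check the prerequisite programmatically, here is a minimal Python sketch. It assumes your nodes carry the well-known `topology.kubernetes.io/zone` label and that you feed it data shaped like the output of `kubectl get nodes -o json`. The function names and the zone names in the sample (`zone-a`, `zone-c`) are hypothetical and only serve the illustration.

```python
import json

ZONE_LABEL = "topology.kubernetes.io/zone"  # well-known Kubernetes node label


def zones_by_node(node_list: dict) -> dict:
    """Map each node name to its zone label (None when the label is missing)."""
    result = {}
    for node in node_list.get("items", []):
        meta = node.get("metadata", {})
        result[meta.get("name")] = meta.get("labels", {}).get(ZONE_LABEL)
    return result


def has_multiple_zones(node_list: dict) -> bool:
    """True when the nodes span at least two distinct, known zones."""
    zones = {zone for zone in zones_by_node(node_list).values() if zone}
    return len(zones) >= 2


# Hypothetical sample shaped like `kubectl get nodes -o json` (zone names are made up).
SAMPLE = json.loads("""
{"items": [
  {"metadata": {"name": "my-pool-zone-a-b9ztj-brgpq",
                "labels": {"topology.kubernetes.io/zone": "zone-a"}}},
  {"metadata": {"name": "my-pool-zone-c-wgrl6-slkq5",
                "labels": {"topology.kubernetes.io/zone": "zone-c"}}}
]}
""")

if __name__ == "__main__":
    print(zones_by_node(SAMPLE))
    print(has_multiple_zones(SAMPLE))
```

In practice, you would load the JSON produced by `kubectl get nodes -o json` on your own cluster instead of the embedded sample.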

On my side, I set up an MKS cluster across 3 AZs (one node pool per AZ), with 3 nodes per node pool:

$ kubectx kubernetes-admin@multi-zone-mks
Switched to context "kubernetes-admin@multi-zone-mks".

$ kubectl get np
NAME             FLAVOR   AUTOSCALED   MONTHLYBILLED   ANTIAFFINITY   DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   MIN   MAX   AGE
my-pool-zone-a   b3-8     false        false           false          3         3         3            3           0     100   20d
my-pool-zone-b   b3-8     false        false           false          3         3         3            3           0     100   20d
my-pool-zone-c   b3-8     false        false           false          3         3         3            3           0     100   20d

$ kubectl get no
NAME                         STATUS   ROLES    AGE   VERSION
my-pool-zone-a-b9ztj-brgpq   Ready    <none>   20d   v1.32.3
my-pool-zone-a-b9ztj-gt5vd   Ready    <none>   20d   v1.32.3
my-pool-zone-a-b9ztj-mss8j   Ready    <none>   20d   v1.32.3
my-pool-zone-b-tr6wf-5wfgz   Ready    <none>   20d   v1.32.3
my-pool-zone-b-tr6wf-ct7fs   Ready    <none>   20d   v1.32.3
my-pool-zone-b-tr6wf-vlkwg   Ready    <none>   20d   v1.32.3
my-pool-zone-c-wgrl6-b2f9s   Ready    <none>   20d   v1.32.3
my-pool-zone-c-wgrl6-lp22l   Ready    <none>   20d   v1.32.3
my-pool-zone-c-wgrl6-slkq5   Ready    <none>   20d   v1.32.3

⚠️ As you can see, the Kubernetes version installed on my cluster is not 1.33, but the ServiceTrafficDistribution feature gate is in Beta and is enabled:

$ kubectl get --raw /metrics | grep kubernetes_feature_enabled | grep Traffic
kubernetes_feature_enabled{name="ServiceTrafficDistribution",stage="BETA"} 1

⚠️ On MKS Standard clusters, don’t forget to enable topology aware routing for the 3-AZ region.

A visual architecture of my MKS cluster:

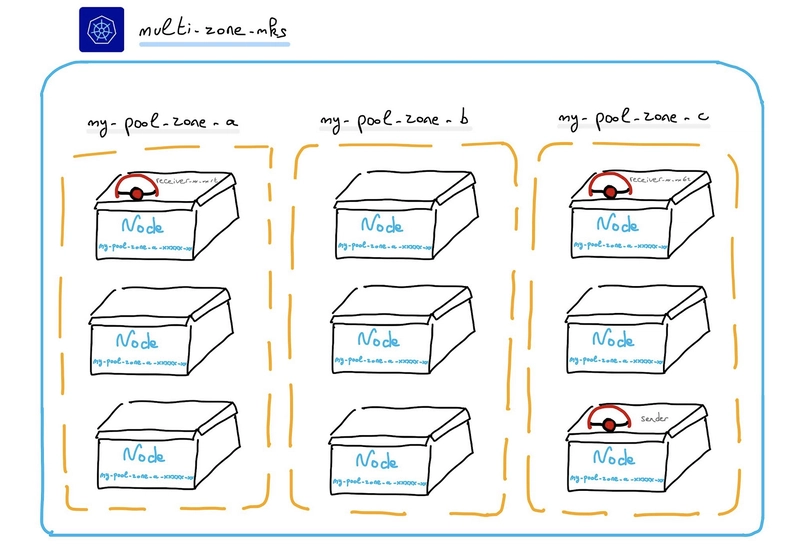
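If you want to script the feature gate check shown earlier rather than read the `grep` output by eye, a minimal Python sketch could look like the following. It assumes the metrics text uses the same label order (`name`, then `stage`) as the line shown in this post; the function name `parse_feature_gates` is hypothetical.

```python
import re

# Matches lines such as:
# kubernetes_feature_enabled{name="ServiceTrafficDistribution",stage="BETA"} 1
# Assumption: labels appear in the order name, stage (as in the output shown in this post).
_GATE_LINE = re.compile(
    r'^kubernetes_feature_enabled\{name="(?P<name>[^"]+)",'
    r'stage="(?P<stage>[^"]*)"\}\s+(?P<value>[01])\s*$'
)


def parse_feature_gates(metrics_text: str) -> dict:
    """Return {gate name: (stage, enabled)} from Prometheus-style metrics text."""
    gates = {}
    for line in metrics_text.splitlines():
        match = _GATE_LINE.match(line.strip())
        if match:
            gates[match["name"]] = (match["stage"], match["value"] == "1")
    return gates


# Sample line taken from the output shown earlier in this post.
SAMPLE = 'kubernetes_feature_enabled{name="ServiceTrafficDistribution",stage="BETA"} 1\n'

if __name__ == "__main__":
    print(parse_feature_gates(SAMPLE))
```

You would typically feed it the text returned by `kubectl get --raw /metrics` and look up the `ServiceTrafficDistribution` entry.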

In order to test this feature, in a new namespace, we will deploy:

- a deployment with two pods named receiver-xxx
- a ClusterIP service named svc-prefer-close with the feature enabled
- a Pod named sender

Let’s do that!

Create a deploy.yaml file with the following content:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: service-traffic-example
  name: receiver
  namespace: prefer-close
spec:
  replicas: 2
  selector:
    matchLabels:
      app: service-traffic-example
  template:
    metadata:
      labels:
        app: service-traffic-example
    spec:
      containers:
      - image: scraly/hello-pod:1.0.1
        name: receiver
        ports:
        - containerPort: 8080
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName

Create a svc.yaml file with the following content:

apiVersion: v1
kind: Service
metadata:
  name: svc-prefer-close
  namespace: prefer-close
  annotations:
    service.kubernetes.io/topology-mode: auto
spec:
  ports:
  - name: http
    protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: service-traffic-example
  type: ClusterIP
  trafficDistribution: PreferClose

As you can see, this Service has two specific configurations.

First, we added the service.kubernetes.io/topology-mode: auto annotation to enable Topology Aware Routing for the Service.

Then, we set trafficDistribution to PreferClose in order to ask Kubernetes to send the traffic, preferably, to a Pod that is “close” to the sender.

Note that, according to the Kubernetes documentation, when the service.kubernetes.io/topology-mode annotation is set to Auto, it takes precedence over the trafficDistribution field.

Create a new namespace and apply the manifest files:

$ kubectl create ns prefer-close
$ kubectl apply -f deploy.yaml
$ kubectl apply -f svc.yaml

Result:

You should have two running Pods on two different Nodes.

$ kubectl get po -o wide -n prefer-close
NAME                        READY   STATUS    RESTARTS   AGE   IP            NODE                         NOMINATED NODE   READINESS GATES
receiver-7cfd89d78d-dhv6z   1/1     Running   0          94s   10.240.4.91   my-pool-zone-c-wgrl6-slkq5   <none>           <none>
receiver-7cfd89d78d-hrxrt   1/1     Running   0          94s   10.240.5.63   my-pool-zone-a-b9ztj-mss8j   <none>           <none>

OK, receiver-xxxxxxxx-dhv6z is running on my-pool-zone-c-xxxx and the other Pod is running on my-pool-zone-a-xxxx. They are running in different Availability Zones.

Now, we can create a sender Pod, which will be scheduled on one of the Nodes.

Run it and execute a curl command to test how traffic is routed to the “svc-prefer-close” Service:

$ kubectl run sender -n prefer-close --image=curlimages/curl -it -- sh
If you don't see a command prompt, try pressing enter.
~ $ curl http://svc-prefer-close.prefer-close:8080
Version: 1.0.1
Hostname: receiver-7cfd89d78d-dhv6z
Node: my-pool-zone-c-wgrl6-slkq5

Let’s check where our Pods are running:

$ kubectl get po -n prefer-close -o wide
NAME                        READY   STATUS    RESTARTS     AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
receiver-7cfd89d78d-dhv6z   1/1     Running   0            9d    10.240.4.91    my-pool-zone-c-wgrl6-slkq5   <none>           <none>
receiver-7cfd89d78d-hrxrt   1/1     Running   0            9d    10.240.5.63    my-pool-zone-a-b9ztj-mss8j   <none>           <none>
sender                      1/1     Running   1 (5s ago)   21s   10.240.3.134   my-pool-zone-c-wgrl6-b2f9s   <none>           <none>

Kube-proxy sent the traffic from the sender to a receiver-xx Pod in the same Availability Zone 🎉

⚠️ Note that because PreferClose means “topologically proximate”, its interpretation may vary across implementations and could encompass endpoints within the same node, rack, zone, or even region.

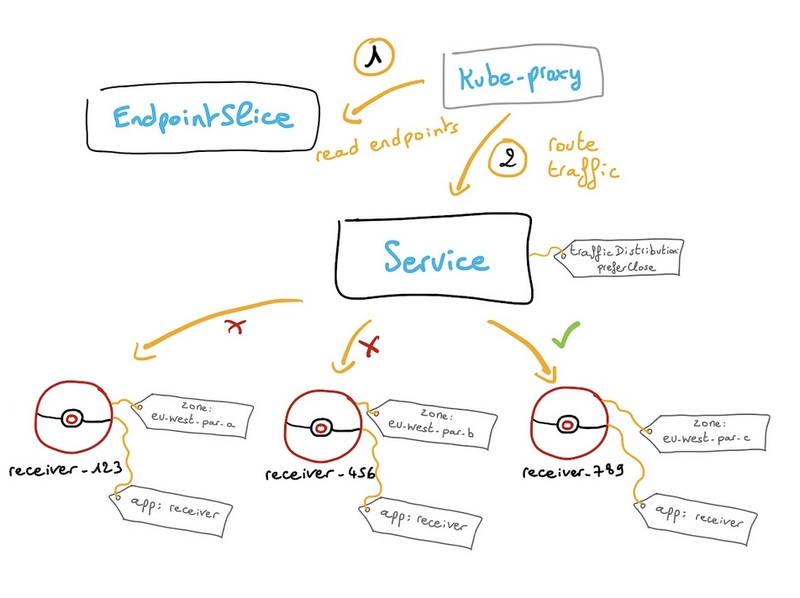
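A single request is fairly weak evidence: with only two receivers, a random pick would land in the sender's zone roughly half of the time. To build more confidence, you can repeat the curl call several times, save the output, and count which Node answered. The sketch below assumes the response format printed by the hello-pod image above; the function name `tally_nodes` is hypothetical, and the sample simply repeats the response shown in this post as illustrative input, not as a measured result.

```python
from collections import Counter


def tally_nodes(raw_output: str) -> Counter:
    """Count how many responses came from each node, using the 'Node:' lines."""
    counts = Counter()
    for line in raw_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Node":
            counts[value.strip()] += 1
    return counts


# Illustrative input only: the response shown in this post, repeated twice.
SAMPLE = """\
Version: 1.0.1
Hostname: receiver-7cfd89d78d-dhv6z
Node: my-pool-zone-c-wgrl6-slkq5
Version: 1.0.1
Hostname: receiver-7cfd89d78d-dhv6z
Node: my-pool-zone-c-wgrl6-slkq5
"""

if __name__ == "__main__":
    for node, hits in tally_nodes(SAMPLE).most_common():
        print(f"{node}: {hits}")
```

If every answer comes from a Node in the sender's zone over many requests, that is a much stronger sign that the traffic really stays in the zone.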

How does it work?

When calculating the endpoints for a Service, the EndpointSlice controller considers the topology (region and zone) of each endpoint and populates the hints field to allocate it to a zone.

Cluster components such as kube-proxy can then consume those hints, and use them to influence how the traffic is routed (favoring topologically closer endpoints).

So, with the PreferClose value for trafficDistribution, we ask kube-proxy to route traffic to the nearest available endpoints based on the network topology.

That’s why the option is called PreferClose.
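To make the mechanism described above more concrete, here is a deliberately simplified Python sketch. It is not the code of the EndpointSlice controller or of kube-proxy, which apply additional safeguards. It only illustrates the idea: each ready endpoint is hinted to its own zone, and the proxy prefers endpoints hinted for the client's zone, falling back to all ready endpoints otherwise. The class, the function names and the zone names (`zone-a`, `zone-b`, `zone-c`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Endpoint:
    name: str
    zone: Optional[str]  # zone of the node hosting the Pod
    ready: bool = True
    for_zones: List[str] = field(default_factory=list)  # simplified "hints"


def assign_prefer_close_hints(endpoints: List[Endpoint]) -> None:
    """Controller side (simplified): hint each ready endpoint to its own zone."""
    for ep in endpoints:
        ep.for_zones = [ep.zone] if ep.ready and ep.zone else []


def select_endpoints(endpoints: List[Endpoint], client_zone: str) -> List[Endpoint]:
    """Proxy side (simplified): prefer endpoints hinted for the client's zone,
    otherwise fall back to every ready endpoint in the cluster."""
    ready = [ep for ep in endpoints if ep.ready]
    local = [ep for ep in ready if client_zone in ep.for_zones]
    return local if local else ready


# Hypothetical zone names mirroring the demo: receivers in zones c and a.
RECEIVERS = [
    Endpoint("receiver-7cfd89d78d-dhv6z", zone="zone-c"),
    Endpoint("receiver-7cfd89d78d-hrxrt", zone="zone-a"),
]
assign_prefer_close_hints(RECEIVERS)

if __name__ == "__main__":
    # A sender in zone c is only offered the zone-c receiver.
    print([ep.name for ep in select_endpoints(RECEIVERS, "zone-c")])
    # A sender in zone b has no local receiver, so both remain candidates.
    print([ep.name for ep in select_endpoints(RECEIVERS, "zone-b")])
```

The fallback branch is what makes the behaviour a preference rather than a hard constraint: a client with no endpoint in its own zone can still reach the Service.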

What’s next?

In the future, you will be able to configure the trafficDistribution field with other values.

Indeed, two new, more explicit values have been available in Alpha since the Kubernetes 1.33 release: PreferSameZone and PreferSameNode.

Personally I can’t wait to test them 😇.

Want to go further?

Want to learn more on this topic? In the coming days, we will publish a blog post about the MKS Premium plan.

Visit our Managed Kubernetes Service (MKS) Premium plan page on the OVHcloud Labs website to learn more about Premium MKS.

Join the free Beta: https://labs.ovhcloud.com/en/managed-kubernetes-service-mks-premium-plan/

Read the documentation about the new Managed Kubernetes Service (MKS) Premium plan.

Join us on Discord and give us your feedback.

Developer Advocate at OVHcloud, specialized in Cloud Native, Infrastructure as Code (IaC) & Developer eXperience (DX).

She is recognized as a Docker Captain, CNCF Ambassador, GDE & Women Techmakers Ambassador.

She has been working as a Developer and Ops for over 20 years. A Cloud enthusiast, she advocates DevOps/Cloud/Golang best practices.

Technical writer, sketchnoter and speaker at international conferences.

Book author, she created a new visual way for people to learn and understand Cloud technologies: "Understanding Kubernetes / Docker / Istio in a visual way" in sketchnotes, books and videos.