Large Language Models (LLMs) and generative AI technologies are everywhere, infiltrating both our personal and professional daily lives. Well-known services are already diverting most internet users away from their old browsing habits, and online information consumption is being profoundly transformed, most likely with no possible return to past behaviours.

Issues related to intellectual property laws and the source of data used to train LLMs, which is sometimes confidential or personal, as well as potential biases in the data, intentional or otherwise, are regularly debated in the press and within technology communities. However, the current focus is on the race between LLM providers, who are competing to develop faster, more efficient models, in search of the ‘wow’ factor that will temporarily propel them to the rank of global AI leader.

Meanwhile, organisations are integrating these technologies into their daily activities at their own pace. Implementation is driven both by employees keen to improve their individual productivity, often based on their experience of using AI tools in their personal lives, and by business leaders and managers, who see an opportunity to make low-value-added tasks more efficient.

At OVHcloud, we have launched an ‘AI Labs’ initiative, which is responsible for centralising projects and experiments using LLM tools. This team now supervises over a hundred projects, and new ones are added every week. The approach aims to catalyse ideas and provide a framework for efficiently implementing effective production tools.

From a data security perspective, the proliferation of experimentation and proof-of-concept (POC) projects creates numerous additional risks that need consideration. Modelling interactions between each component is necessary to understand these risks, as many configurations are possible.

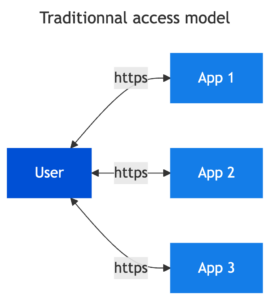

In this article, we will look at some example use cases, identify the main risks and suggest how to address them with a risk-reduction approach. We will focus on simple use cases where a user accesses an application for their work. These applications are accessible from the user’s work context, and each has access management mechanisms that verify the user and grant them access to the data and functions associated with their business profile.

The introduction of LLM technologies fits into the usual operating mode of an information system to enrich the user experience and offer additional features. Let’s take a look at the examples.

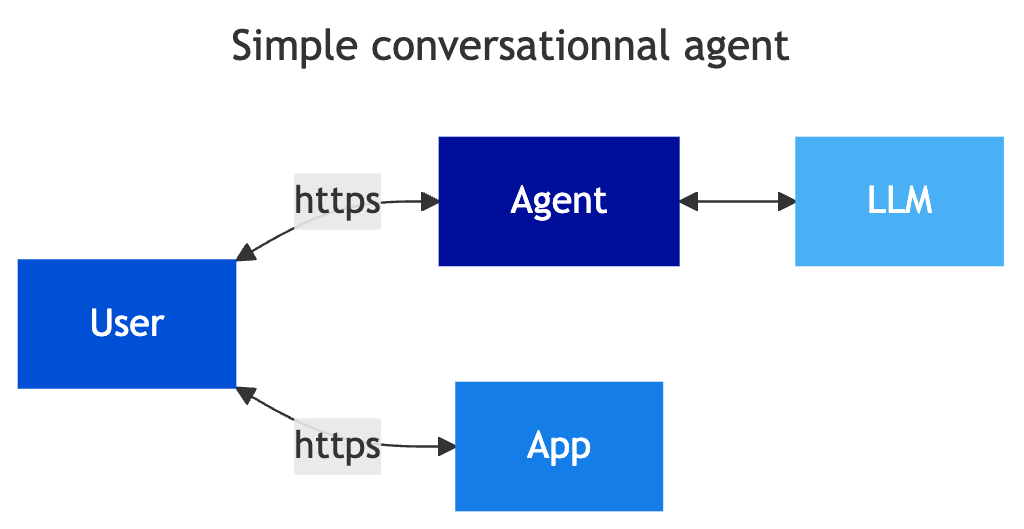

Conversational agents (without third-party integration)

Most professionals working on a computer regularly use conversational agents to ‘enhance’ their work, often without acknowledging it (for example when writing an email, summarising a document, finding a complex Excel formula, answering a legal or technical question, etc.). As these agents are not connected to the company’s information system, the risks are limited and depend on the attitude and practices of the user, for example with regard to uploading data or copying and pasting confidential data into the agent.

In this context, the user is the go-between managing the information transfer between the company application and the third-party agent. The agent only has access to information voluntarily sent by the user, typically via the service interface that allows prompts to be entered. These services are rapidly extending their capabilities, allowing file upload, and microphone or camera access, but we remain in a classic responsibility framework in terms of security, with the human in the loop by design.

Examples

- Public AI services (Mistral, OpenAI, Grok, Omissimo, etc.)

- AI services contracted by the company from public service publishers or specialised players

- Internal chatbot

Associated security risks

- Sending sensitive data (documents, confidential data, personal data, etc.) to the AI service and losing control over this data.

- Training models on confidential data sent by users, which can lead to leaking this data to a user who should not have access to it.

Measures to implement

- User awareness

- AI charter

- Blocking access to non-approved AI services from the company’s information system

- Contract with suppliers including security and confidentiality clauses for user-transmitted information

- Traffic inspection and identification of confidential data using regular expressions

- Dedicated instance for the company, fine-tuned or enriched through RAG with company data of low sensitivity, so that the LLM is adapted to the user’s context.
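As an illustration of the regular-expression measure listed above, here is a minimal sketch of a filter that inspects a prompt before it leaves the company. The pattern set and the names `PATTERNS`, `find_sensitive` and `redact` are assumptions made for this example; a real deployment would tune the patterns to its own data and accept that regular expressions only catch well-structured secrets.

```python
import re

# Hypothetical patterns, for illustration only.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "bearer_token": re.compile(r"\bBearer\s+[A-Za-z0-9._~+/-]{20,}=*"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "iban": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
}


def find_sensitive(text: str) -> dict[str, list[str]]:
    """Return the matches found in `text`, grouped by pattern name."""
    hits = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits


def redact(text: str) -> str:
    """Replace every match with a placeholder naming the pattern."""
    for name, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{name}]", text)
    return text
```

A proxy or browser extension could call `find_sensitive` to block or log a request, or `redact` to let it through with the sensitive parts masked.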

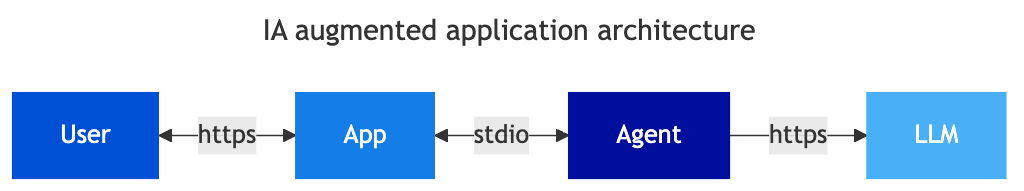

“AI Augmented” Application

Publisher solutions, whether SaaS or deployed internally, are gradually being enriched with LLM-based functions, i.e. an agent on the application side that calls an LLM with prompts designed by the publisher on the data processed by the application. The publisher enriches its solution within its own security model. On the user side, there is no change in usage: the application is simply enriched with new functions (for example synthesis, intelligent suggestions, translation, etc.). LLM processing can be done locally or delegated to external services.

In this use case, the publisher or application manager is responsible for data security and processing via the LLM; the user has no control, and these features are integrated into their usual usage. We remain in a classic security management framework: the application manager (internal or external) is the guarantor of the security of the data processed in the application. The application is enriched with new features and complexity increases, but the security model is preserved.

Examples

- Messaging and video conferencing services with AI features, for example real-time translation, discussion synthesis, automatic meeting minutes, etc.

- Any ‘AI wizard’ in a SaaS application

Associated security risks

- Insufficient segmentation of access rights to data in the application, allowing bypassing of usual application access controls. This is the case when the agent has a high-privilege account (to simplify and accelerate the development of features) or when access restriction is not implemented at data level.

- Prompt injection into the application

- Dependence on an uncontrolled supply chain

- Data leakage to a subcontractor
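The first risk in the list above can be made concrete with a short sketch. It assumes a hypothetical data model (`Document`, `User`, group-based rights) and shows the application filtering documents against the requesting user’s rights before anything is placed in the LLM prompt, instead of relying on a high-privilege agent account.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    allowed_groups: frozenset[str]  # groups allowed to read the document
    text: str


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset[str]


def documents_for_prompt(user: User, candidates: list[Document]) -> list[Document]:
    """Keep only the documents the requesting user is allowed to read.

    The check uses the end user's groups, never the agent's own account,
    so the LLM cannot see more than the user could see in the application.
    """
    return [d for d in candidates if d.allowed_groups & user.groups]
```

The design choice is that access control is applied at data level, on behalf of the user, before the LLM call; the prompt is not trusted to enforce it.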

Measures to implement

- Security clauses in contracts

- Security assurance plan for the application provider

- Review of subcontractor dependency chains

- Disabling unnecessary AI functions

- Deep isolation of sensitive applications

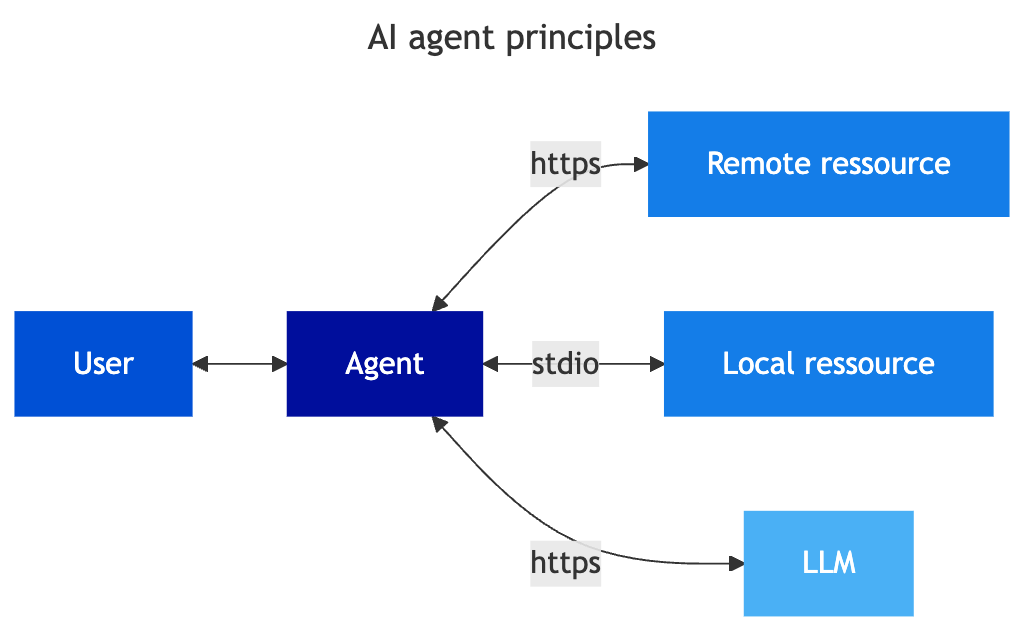

Agentic AI

We will now look at actual ‘Agentic AI’. In these cases, the agent is at the centre of the workflow. The agent becomes an orchestrator of resources. It has several roles, in particular:

- Capturing user expectations and triggering the sequence of actions

- Retrieving the necessary data to contextualise and process the request

- Sending data and instructions to an LLM to find the sequence of actions to be performed

- Managing iterations with available services and LLMs to best handle the request

- Triggering actions on accessible services

- Obtaining user validation of actions, where required

- Providing visibility to the user on actions performed and results obtained

To properly understand the risks, it is necessary to look at different types of agent implementations.

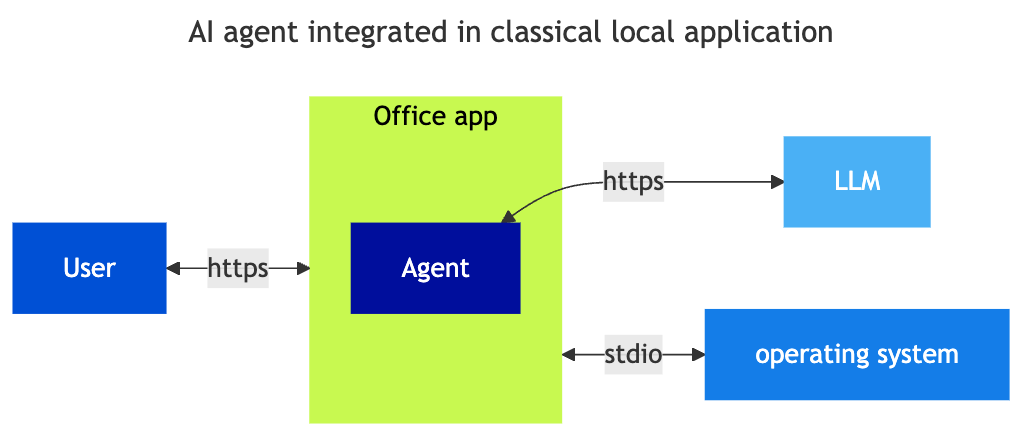

Agents integrated into local applications

Applications are gradually being enriched with the ability to connect to an LLM service. Generally, this is done via APIs to LLM services or locally on the machine. In this case, the application will integrate an agent and incorporate its use into the usual application experience. The framework is equivalent to that of an enriched SaaS application, but the configuration and calls to the LLM are made from the user’s workstation. The functionality can be native or installed in the form of a plugin.

Examples

- Microsoft Copilot AI agent

- AI function in office applications (OnlyOffice, Joplin, email client, etc.)

- Apple Intelligence

Associated security risks

- Loss of control over data processed by adding connectivity functions to third-party services (be careful with default tool configurations)

- Risks are similar to “cloud” functions in applications, allowing cloud storage or sharing, often configured by default

- Leakage of LLM authentication secrets (Bearer Token)

Measures to implement

- User awareness

- Application configuration controls

- Validation of applications on workstations and smartphones

- Monitoring and inspection of network and application flows

- Local management of secrets
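For the last measure, a minimal sketch of local secret handling could look like the following. It assumes the token is provided through an environment variable with the hypothetical name `LLM_API_TOKEN` (for instance injected by an OS keychain or a secrets manager) and is never written to configuration files or logs in clear text.

```python
import os


def load_llm_token(env_var: str = "LLM_API_TOKEN") -> str:
    """Read the token from the environment and fail loudly if it is missing."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"{env_var} is not set; refusing to call the LLM service")
    return token


def mask(token: str, visible: int = 4) -> str:
    """Return a version of the token that is safe to print in logs."""
    if len(token) <= visible:
        return "*" * len(token)
    return token[:visible] + "*" * (len(token) - visible)


def auth_header(token: str) -> dict[str, str]:
    """Build the HTTP header expected by Bearer-token APIs."""
    return {"Authorization": f"Bearer {token}"}
```

Logging `mask(token)` instead of the token itself limits the impact of log files being shared or collected centrally.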

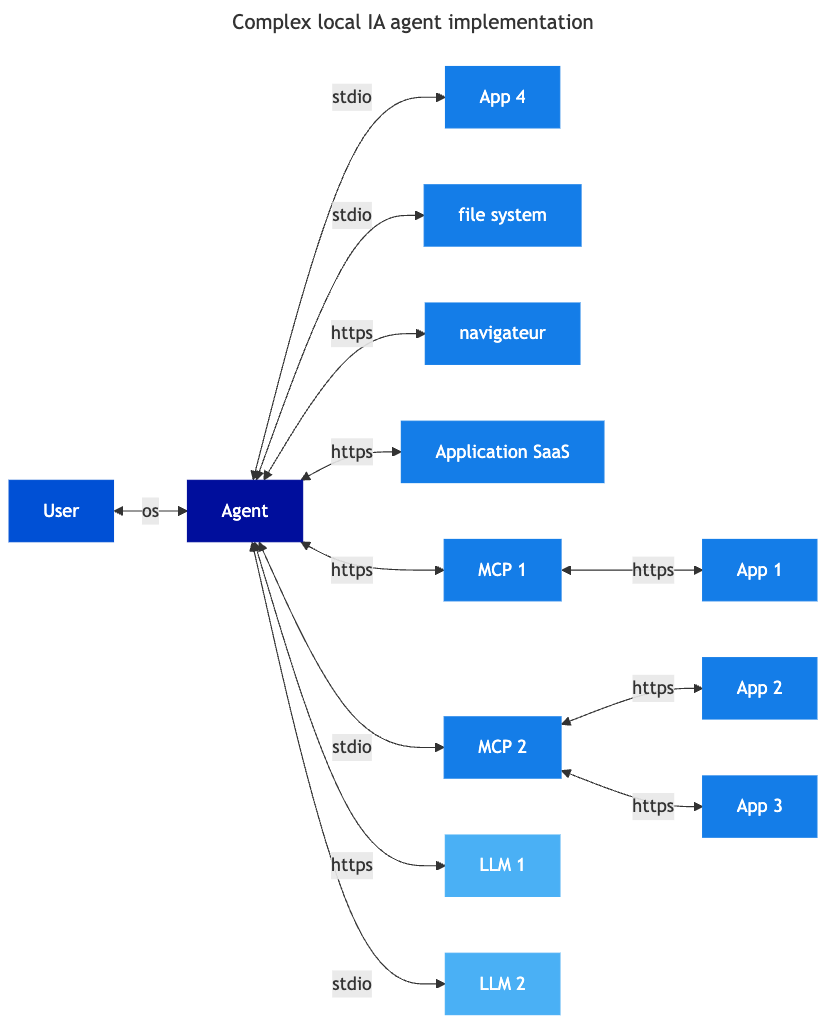

Generalist or Specialized Local Agents

Unlike the previous use case where the application is simply enriched with LLM functions, agents are applications whose primary goal is to integrate LLM functions into a workflow. The risk model is similar, but by nature, the functionalities are much richer and focused on optimising the consumption of LLM services. For example:

- Configuration of multiple LLM services in parallel

- Personalisation of system and user prompt templates by the user

- Integration of local or remote MCP services to enrich the data accessible to the agent

- Cost control function

- Optimisation of requests and context management

These agents can be generalist or specialised. In particular, this type of agent is widely used by developers within their IDE. In this context, security management relies on the user and the local configuration of tools. Capabilities may be extended through marketplace-style plugins that add connectors to external services or new capabilities. The complexity of configurations and the lack of proven, hardened standards, due to the relative novelty of these tools, generate many risks, especially as the application runs directly on the user’s workstation with all of the user’s rights.

Examples

- Generalist agents: Goose

- Specialised agents: Claude Desktop, Cursor, Shai, GitHub Copilot, Continue, Kilo Code

Associated security risks

- Connection to third-party services without controls via marketplace (MCP connector for third-party services)

- Uncontrolled access to local file system

- Sending confidential data to third-party services (business data, secrets, .env file, etc.)

- Management of local secrets (Bearer token)

- Sharing credentials with third-party services (via OAuth mandate, etc.)

Measures to implement

- User awareness

- Application configuration controls

- Software testing and validation

- Sandboxing of agents

- Protection of secrets (environment file in development directories)
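The file-system and secret-protection points above can be sketched as a simple guard placed in front of an agent’s file-reading tool. This is an assumption-laden illustration, not how any of the listed products work: the deny-list `DENIED_NAMES` and the function `is_readable_by_agent` are hypothetical, and a real sandbox would also rely on operating-system isolation.

```python
from fnmatch import fnmatch
from pathlib import Path

# Hypothetical deny-list of file names that often contain secrets.
DENIED_NAMES = (".env", ".env.*", "*.pem", "*.key", "id_rsa*", "credentials*")


def is_readable_by_agent(path: str, workspace: str) -> bool:
    """Allow a read only inside the workspace and outside the deny-list."""
    root = Path(workspace).resolve()
    target = (root / path).resolve()
    # Reject absolute paths and '..' tricks that escape the workspace
    # (Path.is_relative_to requires Python 3.9+).
    if not target.is_relative_to(root):
        return False
    return not any(fnmatch(target.name, pattern) for pattern in DENIED_NAMES)
```

With this guard, a request for `src/main.py` inside the project is accepted, while `.env`, `config/.env.local` or `../other-project/notes.txt` are refused before the content can reach a third-party service.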

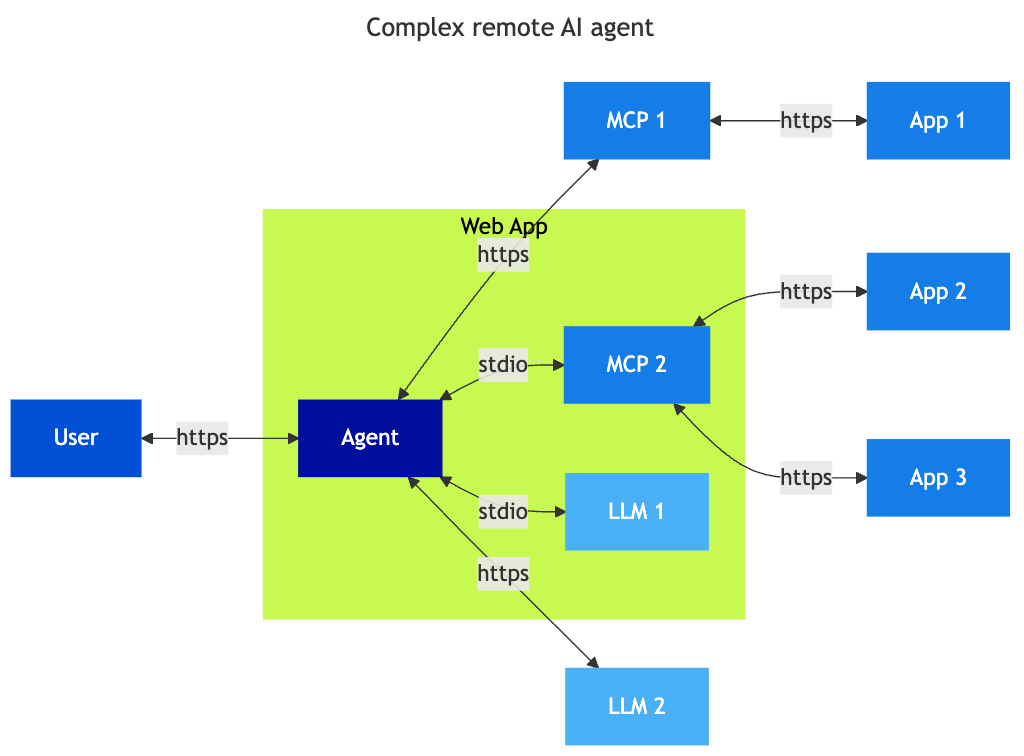

Remote Agents

Remote agents, like local agents, are applications that connect different resources (LLM, RAG, third-party services), packaged within a web application, accessible to the user through their browser. All chatbot services are gradually integrating these capabilities to enrich their service by connecting to third-party services. The operation is similar to local agents, but outside the user’s workstation.

In this case, the main challenge is managing access to third-party services and the resulting secrets. Since the agent is the focal point of the architecture, entrusting its management to a third party requires granting them access rights to third-party services to capitalise on the agent’s functionality.

In the example above, the user must grant the agent an access mandate to consume the MCPs that allow access to application services. Today, most of these mandates are managed by OAuth2 delegations, with the user authorising the agent to use these technical delegations to access applications.
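To illustrate the delegation idea, here is a minimal sketch of a scope check performed before each tool call. It does not use a real OAuth2 library; the `Delegation` class, the scope names and the `TOOL_SCOPES` mapping are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Delegation:
    """What the user has authorised the agent to do on their behalf."""
    user: str
    scopes: frozenset[str]


# Hypothetical mapping between agent tools and the scope each one needs.
TOOL_SCOPES = {
    "calendar.list_events": "calendar.read",
    "calendar.create_event": "calendar.write",
    "mail.send": "mail.send",
}


def authorise_tool_call(delegation: Delegation, tool: str) -> bool:
    """Allow a tool call only if the user delegated the matching scope."""
    required = TOOL_SCOPES.get(tool)
    if required is None:
        return False  # unknown tools are denied by default
    return required in delegation.scopes
```

A user who only granted `calendar.read` lets the agent list events, but a request from the LLM to create an event or send an email is refused, whatever the prompt says.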

Examples

- ChatGPT, MistralAI

- Agents deployed internally

Associated security risks

- Leakage of authentication secrets to sensitive applications or data

- Centralisation of secrets to access remote services

- Opening of network flows between sensitive applications and agent services

Measures to implement

- Architecture to limit network exposure

- Network inspection

- Application monitoring

- Authorisation and access control management

- Restriction of access rights to need-to-know for each task

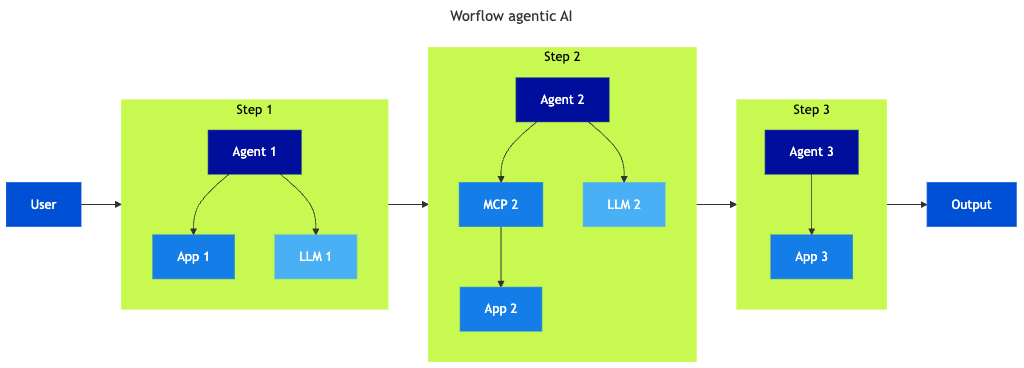

Workflow agents

Workflow agent tools are designed to build AI workflows. They may be local or remote. While all the risky behaviours listed above remain possible in this model, the workflow structure splits the process into small, manageable parts, which allows:

- Limiting each agent’s access rights to the subset of data required to perform its tasks

- A more deterministic approach, with human control over the process

- Unit testing of each part

- Repeatability of the process (workflows are defined ‘as code’)

In this case, the workflow is built and operates as an automation under the control of a project team in charge of aligning the workflow with business processes. The configuration of the workflow management tools is the key to controlling the process. The orchestration platform manages the secrets and the flows to resources, so it needs to be managed with the same care as any other orchestration platform.
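The benefits listed above (limited data per step, testability, workflows defined ‘as code’) can be sketched in a few lines. This is a toy runner written for this article, not the API of any orchestration product; the step functions `summarise` and `route` stand in for LLM calls and are hypothetical.

```python
from typing import Callable

# A step is (name, keys it may read, function producing new keys).
Step = tuple[str, frozenset[str], Callable[[dict], dict]]


def run_workflow(steps: list[Step], data: dict) -> dict:
    """Run steps in order, giving each one only the keys it declared."""
    state = dict(data)
    for _name, allowed_keys, func in steps:
        view = {k: v for k, v in state.items() if k in allowed_keys}
        state.update(func(view))
    return state


def summarise(view: dict) -> dict:
    # Stand-in for an LLM call; it only ever sees the ticket text.
    return {"summary": view["ticket_text"][:40]}


def route(view: dict) -> dict:
    # Deterministic routing rule based on the summary only.
    team = "billing" if "invoice" in view["summary"].lower() else "support"
    return {"team": team}


WORKFLOW: list[Step] = [
    ("summarise", frozenset({"ticket_text"}), summarise),
    ("route", frozenset({"summary"}), route),
]
```

Because each step receives a restricted view, the customer’s email address in the input never reaches the summarisation step, and each function can be unit-tested on its own.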

Examples

- n8n, LangChain, Zapier, Flowise AI

Associated security risks

- Increase in complexity of the workflows and interconnection

- Configuration issues

- Leakage of access tokens

- Exposure of sensitive resources

- Shadow orchestration platforms deployed by users

- Access to temporary artifacts by platform administrators

Measures to implement

- Architecture to limit network exposure

- Network inspection

- Application monitoring

- Authorisation and access control management

- Secrets management

- Restriction of access rights to need-to-know for each task

Perspectives and problems to be solved

MCP and secret management

Secret management is at the heart of the problem of deploying agent-based AI. Since LLMs are not deterministic, it is necessary to constrain access rights in terms of scope and duration for LLMs, in order to limit their access to only the data and functions required to perform tasks. It is essential to identify the reliable blocks that will act as intermediaries to grant access, particularly for MCP servers. One of the challenges is to rely on existing access rights matrices without re-implementing an additional layer of rights management for MCP servers and agents, but instead implementing mechanisms to limit access dynamically as needed.
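As a rough sketch of what “constrained in scope and duration” could mean in practice, the following example models a short-lived grant issued for a single task. The `Grant` structure, the five-minute default and the resource-prefix convention are assumptions for illustration; they are not taken from any of the standards discussed in this section.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Grant:
    subject: str                # the agent or MCP server receiving the grant
    actions: frozenset[str]     # e.g. {"read"}
    resource_prefix: str        # e.g. "crm/accounts/"
    expires_at: float           # Unix timestamp


def issue_grant(subject: str, actions: set[str], resource_prefix: str,
                ttl_seconds: float = 300, now: Optional[float] = None) -> Grant:
    """Issue a grant limited to some actions, a resource subtree and a TTL."""
    issued = time.time() if now is None else now
    return Grant(subject, frozenset(actions), resource_prefix, issued + ttl_seconds)


def is_allowed(grant: Grant, action: str, resource: str,
               now: Optional[float] = None) -> bool:
    """Check expiry first, then the action, then the resource scope."""
    current = time.time() if now is None else now
    return (
        current < grant.expires_at
        and action in grant.actions
        and resource.startswith(grant.resource_prefix)
    )
```

The `now` parameter exists only to make the behaviour testable; in normal use the current time is read from the system clock, and an expired grant simply stops working without any revocation step.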

Existing or emerging standards (OAuth2, JWT, SAML, SPIFFE/SPIRE, OPA, Cedar, etc.) partially address some of these challenges, but at the cost of high management complexity, without a reference implementation compatible with all current solutions, and in a rapidly evolving market.

Human in the loop

Beyond secret management, LLMs are unpredictable because they are non-deterministic. One of the questions to be resolved is how to include humans in the decision-making chain of an agent-based process to ensure that this inherently unpredictable behaviour does not generate risks for organisations. Today, this control, known as ‘human in the loop’, is based on the agent’s internal mechanisms and the limitation of secrets shared with it by the user. Obviously, this mode of operation is not compatible with sensitive processing.

In the future, it will be necessary to build agents that offer a high level of trust, provided by trusted publishers or communities, auditable and audited, and ideally open source, so that these agents can be entrusted with performing operations on a company’s information system. In parallel, it will be necessary to develop independent agent control mechanisms that provide sandboxing, filtering, access management and traceability functions, allowing the responsible user to keep control of their interaction with the information system.
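One possible shape for such an independent control is an approval gate that sits between the agent and the services it calls. The sketch below is an assumption for illustration: the list `SENSITIVE_ACTIONS` and the callbacks `run` and `approve` are hypothetical, and the point is only that the decision is taken outside the agent’s own logic.

```python
from typing import Any, Callable

# Hypothetical list of actions that always require a human decision.
SENSITIVE_ACTIONS = {"delete", "send_email", "payment"}


def execute(action: str, params: dict,
            run: Callable[[str, dict], Any],
            approve: Callable[[str, dict], bool]) -> dict:
    """Run an action requested by the agent, asking a human when needed.

    `run` performs the action; `approve` shows it to a person and returns
    their decision. Non-sensitive actions skip the approval step.
    """
    if action in SENSITIVE_ACTIONS and not approve(action, params):
        return {"status": "rejected", "action": action}
    return {"status": "done", "action": action, "result": run(action, params)}
```

Every call also produces a small record (`status`, `action`) that can feed the traceability function mentioned above.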

Towards the end of the web browser as an access vector to the information system

For about 15 years, the web browser has been the user’s entry point to information systems. While the functional richness of browsers is immense, the attack surface they expose is just as great. Browser security, even if it is imperfect, is one of the pillars of modern security, and browser publishers and communities devote a significant part of their development and maintenance efforts to maintaining the level of security and managing threats.

AI agents are changing this access paradigm to the information system by providing users with dynamic and adaptive interfaces, enriched with high-value contextual functions, which is already causing a revolution in usage and the daily lives of users. It is likely that tomorrow’s browser will be an AI agent, and even more likely that current browsers will gradually become AI agents, integrating all identity and authorisation management standards under user control.

CISO OVHcloud