This blog post first briefly explains what the new MKS Premium plan is, who it is for and which use cases it targets. You will then see how to deploy a new MKS cluster across 3 availability zones and how to deploy your workloads on this new Kubernetes cluster architecture.

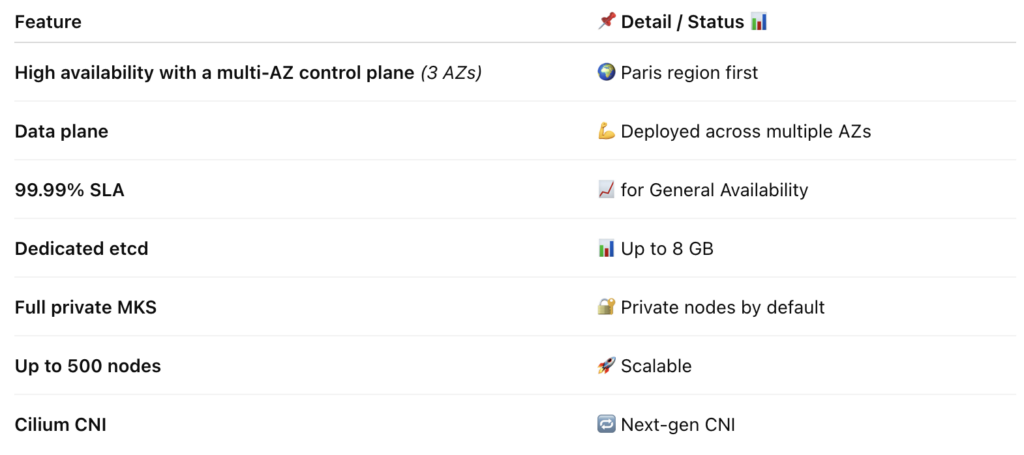

What’s inside the Premium MKS?

On April 30, we launched the Beta of the brand new “Premium plan” for our Managed Kubernetes Service (MKS) 🎉

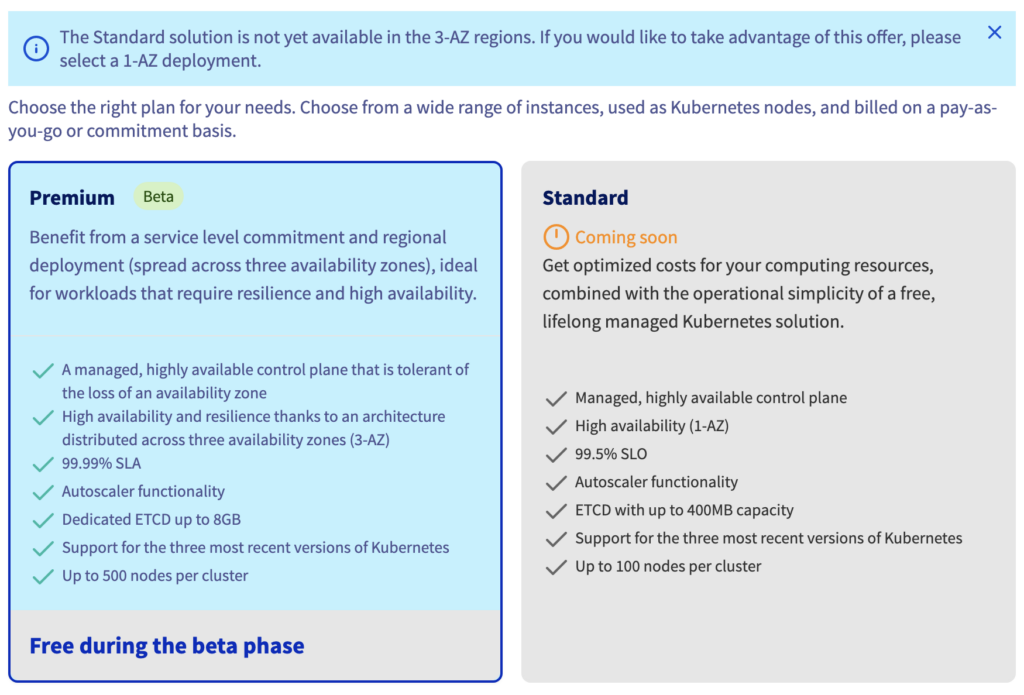

Concretely, with MKS Premium you will have:

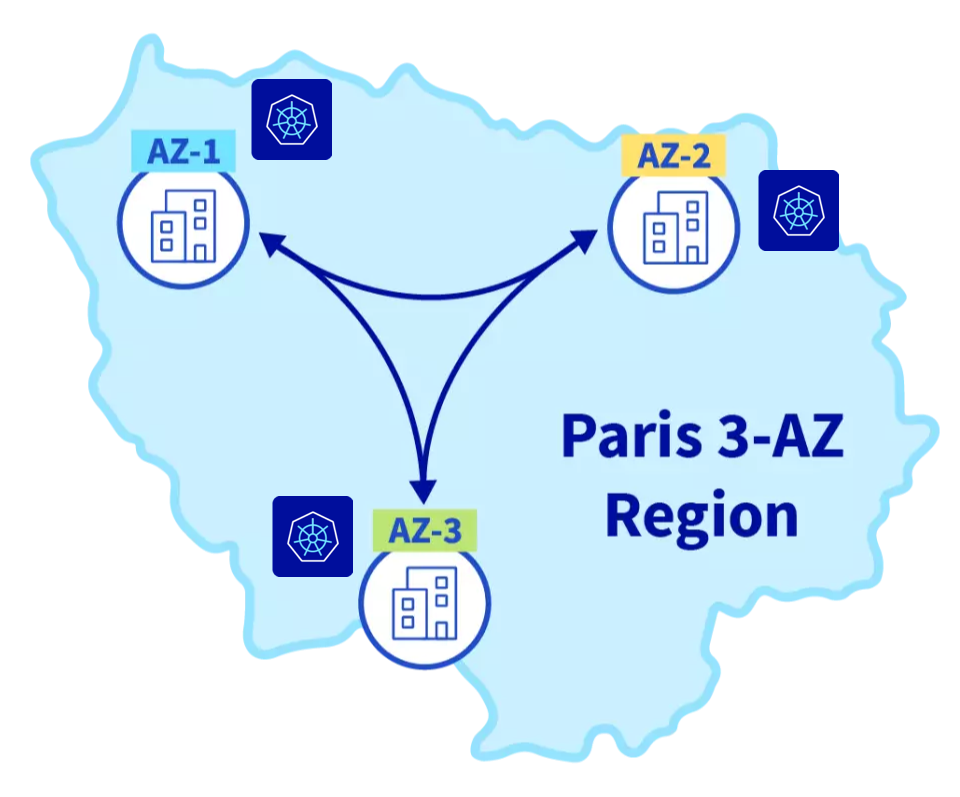

💡 For the moment, Paris is the only 3AZ region available, but several new regions, including Milan, will become available in the coming months.

Behind this new plan is a complete overhaul of our MKS platform, based on several cloud-native open source projects such as Cluster API, Kamaji and Argo CD, along with several homemade Kubernetes operators.

For whom? For what?

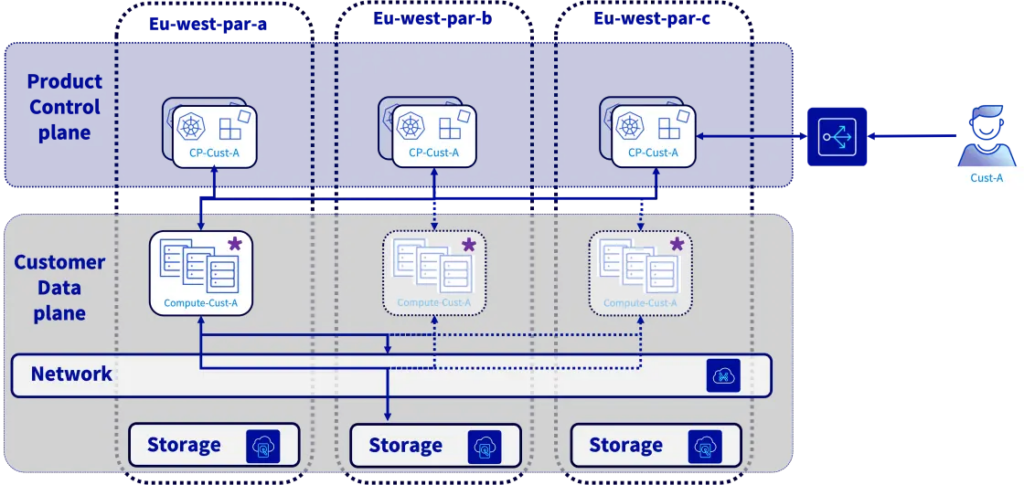

The new MKS Premium plan has been designed for those who want high availability and scalability for their critical applications.

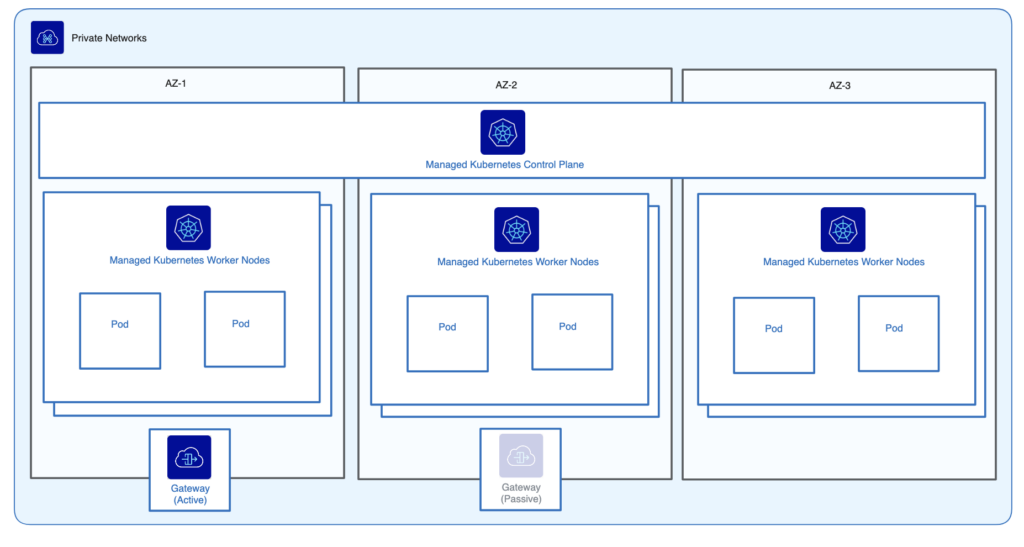

Thanks to a dedicated, fully managed control plane with its own resources, resilient across multiple availability zones, and the ability to deploy the data plane across multiple availability zones, you can design cloud-native applications that withstand failures and deploy them across our multi-zone region.

You have full control over how your worker nodes are deployed in our new 3AZ region (EU-WEST-PAR).

Deploying your cloud-native applications in our new Paris 3-AZ region also means enjoying the full range of services available:

- Well-architected applications relying on resilient managed services (MKS + Load Balancer + Gateway + DBaaS + Object Storage…)

- Advanced internal cluster networking with the new Cilium CNI

- Better API server performance and scaling capacity

- And much more to come!

Let’s deploy an MKS Premium cluster across 3 AZs in Paris!

As with the current Standard MKS, you can deploy MKS in a 3AZ region via the Control Panel (OVHcloud UI), the API, or our Infrastructure as Code (IaC) providers (Terraform/OpenTofu, Pulumi…).

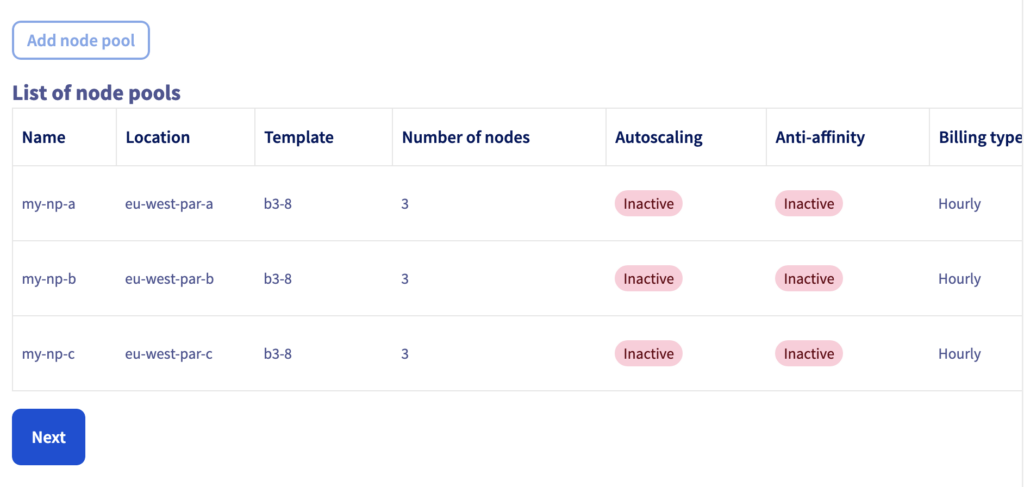

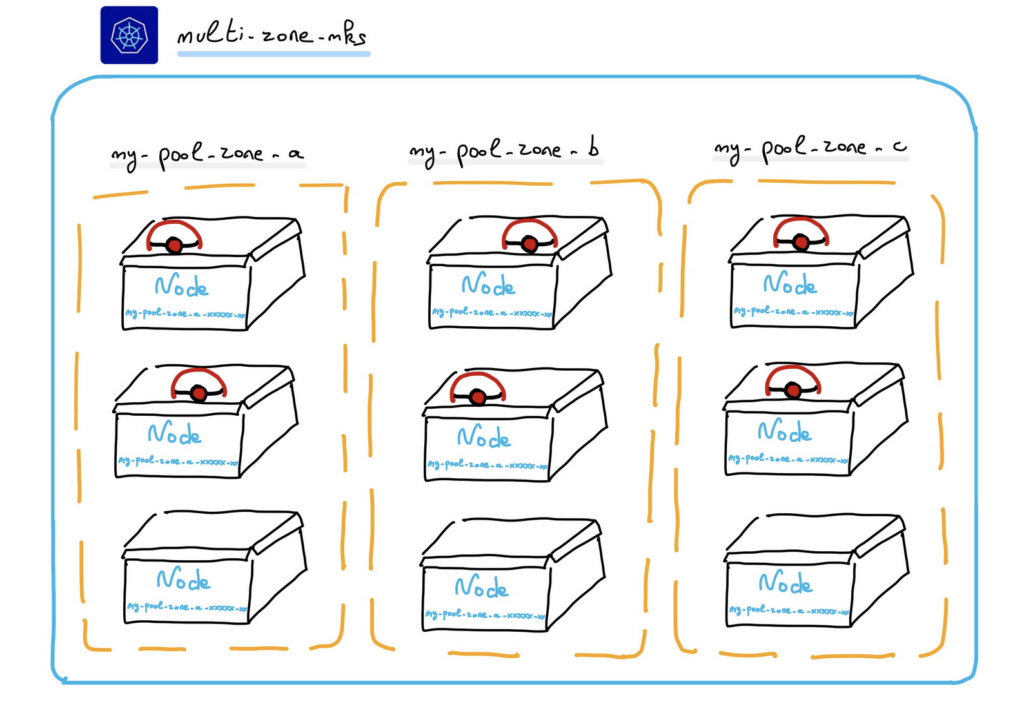

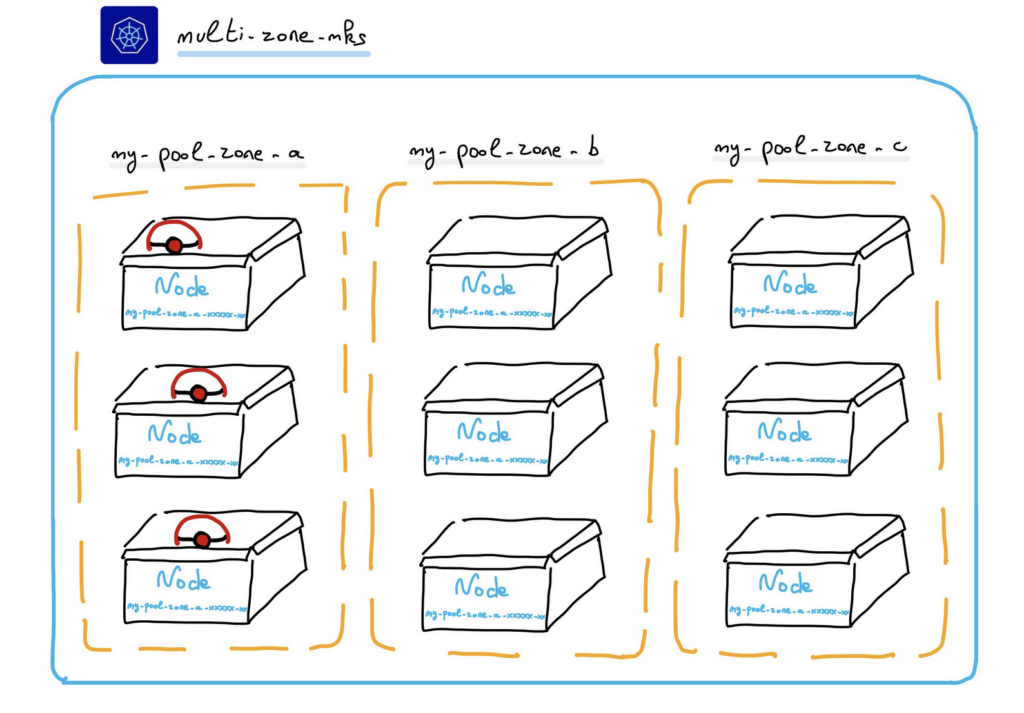

In this blog post, we will deploy a new MKS cluster, in a 3AZ region (Paris) with 3 node pools (one per availability zone).

With OVHcloud Control Panel

Log in to the OVHcloud Control Panel, go to the Public Cloud section and select the Public Cloud project concerned.

In the left panel, go to the Containers & Orchestration section, click on the Managed Kubernetes Service link, then click on the Create a Kubernetes cluster button.

Enter the name of the cluster, choose a 3AZ region, click on Paris (EU-WEST-PAR) and select the Premium plan.

Then, select the Kubernetes version and the security policy.

⚠️ Unlike the Standard MKS, which is public by default, the Premium MKS is private by default, so you must create a private network, a subnet and a gateway.

Then, create one node pool per availability zone, for example with 3 nodes per node pool.

Confirm the creation of your cluster and wait for it to be created.

Finally, click on the newly created cluster and get the kubeconfig file.

With Terraform

In a previous blog post, we showed you how to deploy an MKS cluster with Terraform/OpenTofu. Please read that post if you are not familiar with Terraform or OpenTofu.
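The configuration in this section reads the Public Cloud project ID from `var.service_name` and relies on the OVHcloud Terraform provider, neither of which it declares. If you are starting from an empty directory, a minimal sketch of those missing pieces could look like the following. The file name `provider.tf`, the `ovh-eu` endpoint and the choice to pass credentials through the `OVH_APPLICATION_KEY`, `OVH_APPLICATION_SECRET` and `OVH_CONSUMER_KEY` environment variables are assumptions to adapt to your own account:

```hcl
# provider.tf (hypothetical file name)
terraform {
  required_providers {
    ovh = {
      source = "ovh/ovh"
    }
  }
}

# Assumption: a European OVHcloud account. Credentials are read from the
# OVH_APPLICATION_KEY, OVH_APPLICATION_SECRET and OVH_CONSUMER_KEY
# environment variables rather than being hardcoded here.
provider "ovh" {
  endpoint = "ovh-eu"
}

# ID of the Public Cloud project that will host the cluster.
variable "service_name" {
  type        = string
  description = "OVHcloud Public Cloud project ID"
}
```

You can then provide the value with `terraform apply -var 'service_name=<your-project-id>'` or through a `terraform.tfvars` file.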

Create an ovh_kube.tf file with the following content:

```hcl
resource "ovh_cloud_project_network_private" "network" {
  service_name = var.service_name
  vlan_id      = 84
  name         = "terraform_mks_multiaz_private_net"
  regions      = ["EU-WEST-PAR"]
}

resource "ovh_cloud_project_network_private_subnet" "subnet" {
  service_name = ovh_cloud_project_network_private.network.service_name
  network_id   = ovh_cloud_project_network_private.network.id

  # whatever region, for test purpose
  region     = "EU-WEST-PAR"
  start      = "192.168.142.100"
  end        = "192.168.142.200"
  network    = "192.168.142.0/24"
  dhcp       = true
  no_gateway = false
}

resource "ovh_cloud_project_gateway" "gateway" {
  service_name = ovh_cloud_project_network_private.network.service_name
  name         = "gateway"
  model        = "s"
  region       = "EU-WEST-PAR"
  network_id   = tolist(ovh_cloud_project_network_private.network.regions_attributes[*].openstackid)[0]
  subnet_id    = ovh_cloud_project_network_private_subnet.subnet.id
}

resource "ovh_cloud_project_kube" "my_multizone_cluster" {
  service_name       = ovh_cloud_project_network_private.network.service_name
  name               = "multi-zone-mks"
  region             = "EU-WEST-PAR"
  private_network_id = tolist(ovh_cloud_project_network_private.network.regions_attributes[*].openstackid)[0]
  nodes_subnet_id    = ovh_cloud_project_network_private_subnet.subnet.id

  depends_on = [ovh_cloud_project_gateway.gateway] // Gateway is mandatory for multizones cluster
}

resource "ovh_cloud_project_kube_nodepool" "node_pool_multi_zones_a" {
  service_name       = ovh_cloud_project_network_private.network.service_name
  kube_id            = ovh_cloud_project_kube.my_multizone_cluster.id
  name               = "my-pool-zone-a" // Warning: "_" char is not allowed!
  flavor_name        = "b3-8"
  desired_nodes      = 3
  availability_zones = ["eu-west-par-a"] // Currently, only one zone is supported
}

resource "ovh_cloud_project_kube_nodepool" "node_pool_multi_zones_b" {
  service_name       = ovh_cloud_project_network_private.network.service_name
  kube_id            = ovh_cloud_project_kube.my_multizone_cluster.id
  name               = "my-pool-zone-b"
  flavor_name        = "b3-8"
  desired_nodes      = 3
  availability_zones = ["eu-west-par-b"]
}

resource "ovh_cloud_project_kube_nodepool" "node_pool_multi_zones_c" {
  service_name       = ovh_cloud_project_network_private.network.service_name
  kube_id            = ovh_cloud_project_kube.my_multizone_cluster.id
  name               = "my-pool-zone-c"
  flavor_name        = "b3-8"
  desired_nodes      = 3
  availability_zones = ["eu-west-par-c"]
}

output "kubeconfig_file_eu_west_par" {
  value     = ovh_cloud_project_kube.my_multizone_cluster.kubeconfig
  sensitive = true
}
```

This HCL configuration will create several OVHcloud services:

- a private network

- a subnet

- a gateway (S size)

- an MKS cluster in the EU-WEST-PAR region

- one node pool in the eu-west-par-a availability zone with 3 nodes

- one node pool in the eu-west-par-b availability zone with 3 nodes

- one node pool in the eu-west-par-c availability zone with 3 nodes
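The three node pool resources differ only by their zone suffix. As an optional variation, assuming you have not applied the configuration yet, a single resource with `for_each` can generate them. This is a sketch rather than the configuration used in this post; the local value `zones` and the resource name `node_pool_multi_zones` are hypothetical:

```hcl
locals {
  # Zone suffixes of the EU-WEST-PAR region used in this post.
  zones = toset(["a", "b", "c"])
}

# Replaces the three node_pool_multi_zones_a/b/c resources:
# one node pool is created per zone suffix.
resource "ovh_cloud_project_kube_nodepool" "node_pool_multi_zones" {
  for_each = local.zones

  service_name       = ovh_cloud_project_network_private.network.service_name
  kube_id            = ovh_cloud_project_kube.my_multizone_cluster.id
  name               = "my-pool-zone-${each.key}" // "_" char is not allowed
  flavor_name        = "b3-8"
  desired_nodes      = 3
  availability_zones = ["eu-west-par-${each.key}"]
}
```

Because the resource addresses change (for example `ovh_cloud_project_kube_nodepool.node_pool_multi_zones["a"]`), switching an already deployed cluster to this form would make Terraform plan to destroy and recreate the node pools unless you add `moved` blocks. The rest of this post keeps the three explicit resources.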

Apply the configuration:

```bash
$ terraform apply
...
ovh_cloud_project_network_private.network: Creating...
ovh_cloud_project_network_private.network: Still creating... [10s elapsed]
ovh_cloud_project_network_private.network: Creation complete after 14s [id=pn-xxxxxxxx_xx]
ovh_cloud_project_network_private_subnet.subnet: Creating...
ovh_cloud_project_network_private_subnet.subnet: Creation complete after 3s [id=c14cbb87-xxxx-xxxx-xxxx-7b9d4940d857]
ovh_cloud_project_gateway.gateway: Creating...
ovh_cloud_project_gateway.gateway: Still creating... [10s elapsed]
ovh_cloud_project_gateway.gateway: Creation complete after 13s [id=7dafdcfe-xxxx-xxxx-xxxx-240df8f93af1]
ovh_cloud_project_kube.my_multizone_cluster: Creating...
ovh_cloud_project_kube.my_multizone_cluster: Still creating... [10s elapsed]
ovh_cloud_project_kube.my_multizone_cluster: Still creating... [20s elapsed]
ovh_cloud_project_kube.my_multizone_cluster: Still creating... [30s elapsed]
...
ovh_cloud_project_kube.my_multizone_cluster: Still creating... [1m40s elapsed]
ovh_cloud_project_kube.my_multizone_cluster: Still creating... [1m50s elapsed]
ovh_cloud_project_kube.my_multizone_cluster: Still creating... [2m0s elapsed]
ovh_cloud_project_kube.my_multizone_cluster: Creation complete after 2m2s [id=0196cd9a-xxxx-xxxx-xxxx-3acbb48d6dda]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Creating...
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Creating...
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Creating...
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [10s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [10s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [10s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [20s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [20s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [20s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [30s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [30s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [30s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [40s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [40s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [40s elapsed]
...
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [4m0s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [4m0s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [4m10s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [4m10s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [4m10s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [4m20s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Still creating... [4m20s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Still creating... [4m20s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_c: Creation complete after 4m24s [id=0196cd9c-xxxx-xxxx-xxxx-8e1925c4c18e]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_b: Creation complete after 4m24s [id=0196cd9c-xxxx-xxxx-xxxx-96a18b9202ff]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Still creating... [4m30s elapsed]
ovh_cloud_project_kube_nodepool.node_pool_multi_zones_a: Creation complete after 4m35s [id=0196cd9c-xxxx-xxxx-xxxx-8a08cdc2e68d]

Apply complete! Resources: 7 added, 0 changed, 0 destroyed.

Outputs:

kubeconfig_file_eu_west_par = <sensitive>
```

Our MKS cluster in 3AZ has been deployed 🎉

To connect to it, retrieve the kubeconfig file locally:

```bash
$ terraform output -raw kubeconfig_file_eu_west_par > ~/.kube/multi-zone-mks.yml
```

Connect and discover your MKS cluster

Initialize or append the KUBECONFIG environment variable with the new kubeconfig file:

```bash
export KUBECONFIG=/Users/my-user/.kube/mks.yml:/Users/my-user/.kube/multi-zone-mks.yml
```

Display the node pools. Our cluster has 3 node pools, one per AZ:

```bash
$ kubectl get np
NAME             FLAVOR   AUTOSCALED   MONTHLYBILLED   ANTIAFFINITY   DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   MIN   MAX   AGE
my-pool-zone-a   b3-8     false        false           false          3         3         3            3           0     100   7h8m
my-pool-zone-b   b3-8     false        false           false          3         3         3            3           0     100   7h8m
my-pool-zone-c   b3-8     false        false           false          3         3         3            3           0     100   7h8m
```

You can also display the control plane’s pods in order to discover the new components of the MKS Premium:

```bash
$ kubectl get po -n kube-system
```

How To

Deploy pods across several availability zones

Now, let’s create a Deployment with 6 pods and ask Kubernetes to deploy them in our 3 AZs (in the three node pools).

To do that, create an nginx-cross-az.yaml file with the following content:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-cross-az
  labels:
    app: nginx-cross-az
spec:
  replicas: 6
  selector:
    matchLabels:
      app: nginx-cross-az
  template:
    metadata:
      labels:
        app: nginx-cross-az
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: "topology.kubernetes.io/zone"
                operator: In
                values:
                - eu-west-par-a
                - eu-west-par-b
                - eu-west-par-c
      containers:
      - name: nginx
        image: nginx:1.28.0
        ports:
        - containerPort: 80
```

Thanks to the nodeAffinity feature of Kubernetes, we declare that we want 6 replicas (pods) running in 3 zones: eu-west-par-a, eu-west-par-b, eu-west-par-c.
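Note that node affinity only restricts which zones are eligible: it does not by itself require an even distribution of the replicas. If you need the pods to be balanced across zones, one option is a topology spread constraint. The following is a minimal sketch, not part of the setup used in this post; the name `nginx-spread-az` is hypothetical:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-spread-az
  labels:
    app: nginx-spread-az
spec:
  replicas: 6
  selector:
    matchLabels:
      app: nginx-spread-az
  template:
    metadata:
      labels:
        app: nginx-spread-az
    spec:
      topologySpreadConstraints:
      # Keep the pod count difference between any two zones at 1 or less.
      - maxSkew: 1
        topologyKey: topology.kubernetes.io/zone
        # Leave a pod Pending rather than unbalance the zones.
        whenUnsatisfiable: DoNotSchedule
        labelSelector:
          matchLabels:
            app: nginx-spread-az
      containers:
      - name: nginx
        image: nginx:1.28.0
        ports:
        - containerPort: 80
```

With `maxSkew: 1` and `whenUnsatisfiable: DoNotSchedule`, the scheduler refuses placements that would leave one zone with more than one extra pod compared to another, so 6 replicas end up as 2 per zone when all three zones have capacity. Use `ScheduleAnyway` if you prefer a best-effort spread. The commands that follow still use the nginx-cross-az.yaml manifest.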

Create a new namespace and apply the deployment:

```bash
$ kubectl create ns hello-app
$ kubectl apply -f nginx-cross-az.yaml -n hello-app
```

As you can see, 6 pods have been created, and they are running on nodes located in the 3 AZs.

```bash
$ kubectl get po -o wide -l app=nginx-cross-az -n hello-app
NAME                             READY   STATUS    RESTARTS   AGE    IP             NODE                         NOMINATED NODE   READINESS GATES
nginx-cross-az-6ffd957c4-7528p   1/1     Running   0          6s     10.240.2.140   my-pool-zone-b-tr6wf-5wfgz   <none>           <none>
nginx-cross-az-6ffd957c4-96mnh   1/1     Running   0          6s     10.240.3.91    my-pool-zone-c-wgrl6-b2f9s   <none>           <none>
nginx-cross-az-6ffd957c4-b48cv   1/1     Running   0          115m   10.240.6.182   my-pool-zone-c-wgrl6-lp22l   <none>           <none>
nginx-cross-az-6ffd957c4-k7rwf   1/1     Running   0          115m   10.240.1.237   my-pool-zone-b-tr6wf-ct7fs   <none>           <none>
nginx-cross-az-6ffd957c4-pb7zp   1/1     Running   0          115m   10.240.8.195   my-pool-zone-a-b9ztj-gt5vd   <none>           <none>
nginx-cross-az-6ffd957c4-vhhcw   1/1     Running   0          6s     10.240.7.40    my-pool-zone-a-b9ztj-brgpq   <none>           <none>
```

Deploy pods only in a desired availability zone

You can also pin a Deployment with 3 replicas to the AZ of your choice, for example eu-west-par-a only.

Create an nginx-one-az.yaml file with the following content:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-one-az
  labels:
    app: nginx-one-az
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-one-az
  template:
    metadata:
      labels:
        app: nginx-one-az
    spec:
      nodeSelector:
        topology.kubernetes.io/zone: eu-west-par-a
      containers:
      - name: nginx
        image: nginx:1.28.0
        ports:
        - containerPort: 80
```

Deploy the manifest file in your cluster:

```bash
$ kubectl apply -f nginx-one-az.yaml -n hello-app
deployment.apps/nginx-one-az created
```

As you can see, our three pods are running in the PAR region, only on the zone-a nodes:

```bash
$ kubectl get po -o wide -l app=nginx-one-az -n hello-app
NAME                            READY   STATUS    RESTARTS   AGE    IP             NODE                         NOMINATED NODE   READINESS GATES
nginx-one-az-6b5f9bdccc-8vv9l   1/1     Running   0          98s    10.240.7.13    my-pool-zone-a-b9ztj-brgpq   <none>           <none>
nginx-one-az-6b5f9bdccc-ck99s   1/1     Running   0          100s   10.240.5.216   my-pool-zone-a-b9ztj-mss8j   <none>           <none>
nginx-one-az-6b5f9bdccc-tlg4d   1/1     Running   0          96s    10.240.8.221   my-pool-zone-a-b9ztj-gt5vd   <none>           <none>
```

Want to go further?

Want to learn more on this topic? In the coming days, we will publish another blog post about the MKS Premium plan.

Visit the Managed Kubernetes Service (MKS) Premium plan page on the OVHcloud Labs website to learn more about Premium MKS.

Join the free Beta: https://labs.ovhcloud.com/en/managed-kubernetes-service-mks-premium-plan/

Read the documentation about the new Managed Kubernetes Service (MKS) Premium plan.

Join us on Discord and give us your feedback.

Developer Advocate at OVHcloud, specialized in Cloud Native, Infrastructure as Code (IaC) & Developer eXperience (DX).

She is recognized as a Docker Captain, CNCF Ambassador, GDE and Women Techmakers Ambassador.

She has been working as a Developer and Ops for over 20 years. A cloud enthusiast, she advocates DevOps/Cloud/Golang best practices.

Technical writer, sketchnoter and speaker at international conferences.

Book author, she created a new visual way for people to learn and understand Cloud technologies: "Understanding Kubernetes / Docker / Istio in a visual way" in sketchnotes, books and videos.