RAG vs. Fine-Tuning

Choosing the Right Method for External Knowledge

In AI development, incorporating proprietary data and external knowledge is crucial. Two key methodologies are Retrieval Augmented Generation (RAG) and fine-tuning. Here’s a quick comparison.

𝐑𝐞𝐭𝐫𝐢𝐞𝐯𝐚𝐥 𝐀𝐮𝐠𝐦𝐞𝐧𝐭𝐞𝐝 𝐆𝐞𝐧𝐞𝐫𝐚𝐭𝐢𝐨𝐧 (𝐑𝐀𝐆) 🔍

RAG combines an LLM’s reasoning with external knowledge through three steps:

1️⃣ Retrieve: Identify related documents from an external knowledge base.

2️⃣ Augment: Enhance the input prompt with these documents.

3️⃣ Generate: Produce the final output using the augmented prompt.

The retrieve step is pivotal, especially when dealing with large knowledge bases. Vector databases are often used to manage and search these extensive datasets efficiently.

Implementing a RAG-Chain with Vector Databases: time to recall the post “AI concept in a Nutshell: LLM series – Embeddings & Vectors ” from 1 month ago!

𝐅𝐢𝐧𝐞-𝐓𝐮𝐧𝐢𝐧𝐠 🛠️

Fine-tuning adjusts the LLM’s weights using proprietary data, extending its capabilities to specific tasks.

Approaches to Fine-Tuning:

1️⃣ Supervised Fine-Tuning: Uses demonstration data with input-output pairs.

2️⃣ Reinforcement Learning from Human Feedback: Requires human-labeled data and optimizes the model based on quality scores.

Both approaches need careful decision-making and can be complex.

𝐖𝐡𝐞𝐧 𝐭𝐨 𝐔𝐬𝐞 𝐑𝐀𝐆 𝐨𝐫 𝐅𝐢𝐧𝐞-𝐓𝐮𝐧𝐢𝐧𝐠? 🤔

· RAG: Great for adding factual knowledge without altering the LLM. Easy to implement but adds extra components.

· Fine-Tuning: Best for specializing in new domains. Offers full customizability but requires labeled data and expertise. May cause catastrophic forgetting.

Choose based on your needs and resources. Both methods have their strengths and challenges, making them valuable tools in AI development.

LLM Temperature

I love the analogy shared during one of the break-out sessions at the OVHcloud summit: LLM temperature is like blood alcohol level – the higher it is, the more unexpected the answers! 😂

To be more specific, temperature is a parameter that controls the randomness of the model’s output. A higher temperature encourages more diverse and creative responses, while a lower temperature makes the output more deterministic and predictable.

🔍 Key Points:

🔹 High Temperature: More random and creative outputs, useful for brainstorming and generating novel ideas.

🔹 Low Temperature: More predictable and coherent outputs, ideal for factual information and structured content.

Understanding and adjusting the temperature can help tailor LLM outputs to specific needs, whether you’re looking for creativity or precision.

Low-rank adaptation (LoRA): Turning LLMs and other foundation models into specialists

Before a foundation model is ready to take on real-world problems, it’s typically fine-tuned on specialized data and its billions, or trillions, of weights are recalculated. This style of conventional fine-tuning is slow & expensive.

⚡ LoRA is a quicker solution. With LoRA, you fine-tune a small subset of the base model’s weights, creating a plug-in module that gives the model expertise in, for example, biology or mathematical reasoning at inference time. Like custom bits for a multi-head screwdriver, LoRAs can be swapped in and out of the base model to give it specialized capabilities.

By detaching model updates from the model itself, LoRA has become the most popular of the parameter-efficient fine-tuning (PEFT) methods to emerge with generative AI.

The LoRA approach also makes it easier to add new skills and knowledge without overwriting what the model previously learned, a phenomenon known as catastrophic forgetting. LoRA offers a way to inject new information into a model without sacrificing performance.

But perhaps its most powerful benefit comes at inference time. Loading LoRA updates on and off a base model with the help of additional optimization techniques can be much faster than switching out fully tuned models. With LoRA, hundreds of customized models or more can be served to customers in the time it would take to serve one fully fine-tuned model.

Chain of Thought (CoT)

One of the most effective techniques to improve the performance of a prompt is the Chain of Thought (CoT).

The principle consists of breaking down a problem into several tasks or summarizing all the data available upstream.

Examples:

1) 𝗖𝗼𝗻𝘁𝗲𝗻𝘁 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗼𝗻: Rather than directly asking the LLM to generate a summary, we can first ask it to generate a table of contents and then a summary.

The final result will therefore have a better chance of being exhaustive on the content of the initial document.

2) 𝗖𝗿𝗲𝗮𝘁𝗶𝗼𝗻 𝗼𝗳 𝘀𝘁𝗿𝘂𝗰𝘁𝘂𝗿𝗲𝗱 𝗲𝗻𝘁𝗶𝘁𝗶𝗲𝘀: The CoT allows you to control the format of generation of structured entities (for example in a YAML format that we will have detailed).

These examples will greatly reduce the chances of having hallucinations in the final response of the LLM.

3) 𝗔𝗴𝗲𝗻𝘁 𝗪𝗼𝗿𝗸𝗳𝗹𝗼𝘄: For an LLM Agent, it is preferable to ask the LLM to explicitly generate a plan of the actions it must perform to accomplish its task.

“Start by making a plan of the actions you will have to perform to solve your task. Then answer with the tools you want to use in a YAML block, example: […]”

4) 𝗥𝗔𝗚 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗼𝗻: Using CoT can improve the quality of the generated response while reducing hallucinations. Asking the LLM to summarize the relevant information contained in the documents will strengthen this semantic field when it has to generate the final response.

Conclusion

The chain of thought allows to strengthen the semantic field of the expected result in two different ways:

- Explicit chain of thought: by giving examples of the expected result in the prompt

- Internal chain of thought: by telling the LLM to generate intermediate “reasoning” steps

This increases latency and costs but in many situations the result is worth the investment

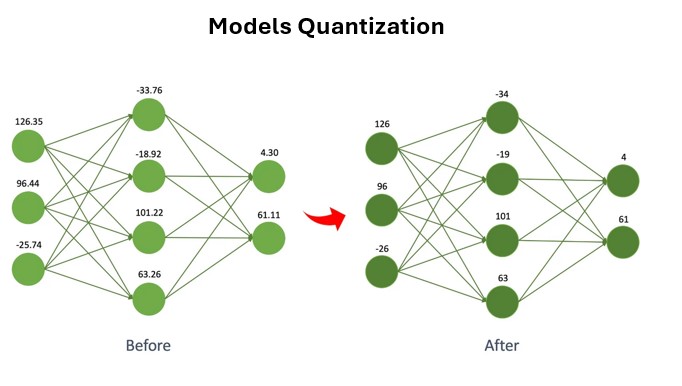

LLM Quantization

Quantization in AI is a technique used to reduce the precision of the numbers that represent the model’s parameters, which are typically weights and activations.

The two most common quantization cases are float32 -> float16 and float32 -> int8.

This reduction in precision leads to several benefits:

1️⃣ 𝗠𝗼𝗱𝗲𝗹 𝗦𝗶𝘇𝗲 𝗥𝗲𝗱𝘂𝗰𝘁𝗶𝗼𝗻: Lower precision means fewer bits are needed to store each parameter, resulting in a smaller model size.

2️⃣ 𝗙𝗮𝘀𝘁𝗲𝗿 𝗜𝗻𝗳𝗲𝗿𝗲𝗻𝗰𝗲: Lower-precision computations can be performed more quickly, especially on hardware designed for integer operations.

3️⃣ 𝗥𝗲𝗱𝘂𝗰𝗲𝗱 𝗣𝗼𝘄𝗲𝗿 𝗖𝗼𝗻𝘀𝘂𝗺𝗽𝘁𝗶𝗼𝗻: Lower-precision operations consume less power, which is crucial for edge devices like smartphones and IoT sensors.

For example, a deep learning model used for image classification might originally use 32-bit floats for its weights. By applying quantization, these weights can be converted to 8-bit integers. This reduces the model size by a factor of 4 and significantly speeds up inference, making it more efficient to deploy on resource-constrained devices.

In practice, quantization can be applied post-training or during training (quantization-aware training) to minimize any potential loss in model accuracy.

Fostering and enhancing impactful collaborations with a diverse array of AI partners, spanning Independent Software Vendors (ISVs), Startups, Managed Service Providers (MSPs), Global System Integrators (GSIs), subject matter experts, and more, to deliver added value to our joint customers.

🏁 CAREER JOURNEY – Got a Master degree in IT in 2007 and started career as an IT Consultant

In 2010, had the opportunity to develop business in the early days of the new Cloud Computing era.

In 2016, started to witness the POWER of Partnerships & Alliances to fuel business growth, especially as we became the main go-to MSP partner for healthcare French projects from our hyperscaler.

Decided to double down on this approach through various partner-centric positions: as a managed service provider or as a cloud provider, for Channels or for Tech alliances.

➡️ Now happy to lead an ECOSYSTEM of AI players each bringing high value to our joint customers.